Without a good problem definition, you might put effort into solving the wrong problem.

Be sure you know what you’re aiming at before you shoot.

Stable Requirements

Checklist: Requirements

Specific Functional Requirements

Are all the inputs to the system specified, including their source, accuracy, range of values, and frequency?

Are all the outputs from the system specified, including their destination, accuracy, range of values, frequency, and format?

Are all output formats specified for web pages, reports, and so on?

Are all the external hardware and software interfaces specified?

Are all the external communication interfaces specified, including handshaking, error-checking, and communication protocols?

Are all the tasks the user wants to perform specified?

Is the data used in each task and the data resulting from each task specified?

Specific Non-Functional (Quality) Requirements

Is the expected response time, from the user’s point of view, specified for all necessary operations?

Are other timing considerations specified, such as processing time, data-transfer rate, and system throughput?

Is the level of security specified?

Is the reliability specified, including the consequences of software failure, the vital information that needs to be protected from failure, and the strategy for error detection and recovery?

Is maximum memory specified?

Is the maximum storage specified?

Is the maintainability of the system specified, including its ability to adapt to changes in specific functionality, changes in the operating environment, and changes in its interfaces with other software?

Is the definition of success included? Of failure?

Requirements Quality

Are the requirements written in the user’s language? Do the users think so? Does each requirement avoid conflicts with other requirements?

Are acceptable trade-offs between competing attributes specified—for example, between robustness and correctness?

Do the requirements avoid specifying the design?

Are the requirements at a fairly consistent level of detail? Should any requirement be specified in more detail? Should any requirement be specified in less detail?

Are the requirements clear enough to be turned over to an independent group for construction and still be understood?

Is each item relevant to the problem and its solution? Can each item be traced to its origin in the problem environment?

Is each requirement testable? Will it be possible for independent testing to determine whether each requirement has been satisfied?

Are all possible changes to the requirements specified, including the likelihood of each change?

Requirements Completeness

Where information isn’t available before development begins, are the areas of incompleteness specified?

Are the requirements complete in the sense that if the product satisfies every requirement, it will be acceptable?

Are you comfortable with all the requirements? Have you eliminated requirements that are impossible to implement and included just to appease your customer or your boss?

Typical Architectural Components

Program Organization

A system architecture first needs an overview that describes the system in broad terms.

In the architecture, you should find evidence that alternatives to the final organization were considered and find the reasons the organization used was chosen over the alternatives.

One review of design practices found that the design rationale is at least as important for maintenance as the design itself.

Every feature listed in the requirements should be covered by at least one building block. If a function is claimed by two or more building blocks, their claims should cooperate, not conflict.

Major Classes

The architecture should specify the major classes to be used. It should identify the responsibilities of each major class and how the class will interact with other classes.

It should include descriptions of the class hierarchies, of state transitions, and of object persistence.

If the system is large enough, it should describe how classes are organized into subsystems.

The architecture should describe other class designs that were considered and give reasons for preferring the organization that was chosen.

The architecture doesn’t need to specify every class in the system; aim for the 80/20 rule: specify the 20 percent of the classes that make up 80 percent of the system’s behavior.

Data Design

The architecture should describe the major files and table designs to be used.

User Interface Design

Sometimes the user interface is specified at requirements time.

If it isn’t, it should be specified in the software architecture. The architecture should specify major elements of web page formats, GUIs, command line interfaces, and so on.

Careful architecture of the user interface makes the difference between a well-liked program and one that’s never used.

Input/Output

Input/output is another area that deserves attention in the architecture.

The architecture should specify a look-ahead, look-behind, or just-in-time reading scheme.

And it should describe the level at which I/O errors are detected: at the field, record, stream, or file level.

Resource Management

The architecture should describe a plan for managing scarce resources such as database connections, threads, and handles.

Memory management is another important area for the architecture to treat in memory-constrained application areas like driver development and embedded systems.

Security

The architecture should describe the approach to design-level and code-level security.

Performance

If performance is a concern, performance goals should be specified in the requirements.

Performance goals can include both speed and memory use.

Scalability

Scalability is the ability of a system to grow to meet future demands.

The architecture should describe how the system will address growth in number of users, number of servers, number of network nodes, database size, transaction volume, and so on.

Interoperability

If the system is expected to share data or resources with other software or hardware, the architecture should describe how that will be accomplished.

Internationalization / Localization

“Internationalization” is the technical activity of preparing a program to support multiple locales.

Internationalization is often known as “I18N” because the first and last characters in “internationalization” are “I” and “N” and because there are 18 letters in the middle of the word.

“Localization” (known as “L10n” for the same reason) is the activity of translating a program to support a specific local language.

If the program is to be used commercially, the architecture should show that the typical string and character-set issues have been considered, including the character set used (ASCII, DBCS, EBCDIC, MBCS, Unicode, ISO 8859, and so on), the kinds of strings used (C strings, Visual Basic strings, and so on), maintaining the strings without changing code, and translating the strings into foreign languages with minimal impact on the code and the user interface.

The architecture can decide to use strings in line in the code where they’re needed, keep the strings in a class and reference them through the class interface, or store the strings in a resource file.
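As a rough illustration of the second option (keeping strings in a class and referencing them through the class interface), the sketch below uses a hypothetical `Messages` class. The class name, the keys, and the two sample locales are assumptions made for this example only.

```python
class Messages:
    """Hypothetical string table: callers ask for text by key, never embed literals."""

    _TABLES = {
        "en": {"greeting": "Hello, {name}!", "farewell": "Goodbye."},
        "de": {"greeting": "Hallo, {name}!", "farewell": "Auf Wiedersehen."},
    }

    def __init__(self, locale="en"):
        # Fall back to English when the requested locale has no table.
        self._table = self._TABLES.get(locale, self._TABLES["en"])

    def text(self, key, **params):
        # Fill in any named placeholders in the stored string.
        return self._table[key].format(**params)


print(Messages("de").text("greeting", name="Ada"))
```

Because every caller goes through `text()`, the tables could later move to a resource file without touching the calling code.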

Error Processing

Error handling is often treated as a coding-convention–level issue, if it’s treated at all.

But because it has system-wide implications, it is best treated at the architectural level. Here are some questions to consider:

Is error processing corrective or merely detective?

If corrective, the program can attempt to recover from errors.

If it’s merely detective, the program can continue processing as if nothing had happened, or it can quit.

In either case, it should notify the user that it detected an error.

Is error detection active or passive?

The system can actively anticipate errors—for example, by checking user input for validity—or it can passively respond to them only when it can’t avoid them—for example, when a combination of user input produces a numeric overflow.

It can clear the way or clean up the mess. Again, in either case, the choice has user-interface implications.

How does the program propagate errors?

Once it detects an error, it can immediately discard the data that caused the error, it can treat the error as an error and enter an error-processing state, or it can wait until all processing is complete and notify the user that errors were detected (somewhere).

What are the conventions for handling error messages?

If the architecture doesn’t specify a single, consistent strategy, the user interface will appear to be a confusing macaroni-and-dried-bean collage of different interfaces in different parts of the program.

To avoid such an appearance, the architecture should establish conventions for error messages.

Inside the program, at what level are errors handled?

You can handle them at the point of detection, pass them off to an error-handling class, or pass them up the call chain.

What is the level of responsibility of each class for validating its input data?

Is each class responsible for validating its own data, or is there a group of classes responsible for validating the system’s data?

Can classes at any level assume that the data they’re receiving is clean?

Do you want to use your environment’s built-in exception handling mechanism, or build your own?

The fact that an environment has a particular error-handling approach doesn’t mean that it’s the best approach for your requirements.
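To make the active-versus-passive question above more concrete, here is a minimal sketch of both styles applied to the same task. The function names and the `InputError` class are hypothetical, and the rule that a quantity must be a non-negative whole number is an assumption chosen for the example.

```python
class InputError(ValueError):
    """Hypothetical error type used to report bad user input consistently."""


def parse_quantity_active(text):
    # Active detection: check the input for validity before using it.
    if not text.strip().isdecimal():
        raise InputError(f"quantity must be a non-negative whole number, got {text!r}")
    return int(text)


def parse_quantity_passive(text):
    # Passive detection: attempt the conversion and respond only if it fails.
    try:
        return int(text)
    except ValueError as exc:
        raise InputError(f"could not read quantity {text!r}") from exc
```

Both versions report failures through one error type, which is one way to keep error-message conventions consistent across the program.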

Fault Tolerance

Fault tolerance is a collection of techniques that increase a system’s reliability by detecting errors, recovering from them if possible, and containing their bad effects if not.

For example, a system could make the computation of the square root of a number fault tolerant in any of several ways:

The system might back up and try again when it detects a fault.

If the first answer is wrong, it would back up to a point at which it knew everything was all right and continue from there.

The system might have auxiliary code to use if it detects a fault in the primary code.

In the example, if the first answer appears to be wrong, the system switches over to an alternative square-root routine and uses it instead.

The system might use a voting algorithm.

It might have three square-root classes that each use a different method.

Each class computes the square root, and then the system compares the results.

Depending on the kind of fault tolerance built into the system, it then uses the mean, the median, or the mode of the three results.

The system might replace the erroneous value with a phony value that it knows to have a benign effect on the rest of the system.

Other fault-tolerance approaches include having the system change to a state of partial operation or a state of degraded functionality when it detects an error.

It can shut itself down or automatically restart itself.
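The voting approach described above can be sketched as follows. This is only an illustration under stated assumptions: the three routines and the `voted_sqrt` name are hypothetical, the input is assumed to be non-negative, and the median is chosen here because it ignores one wildly wrong result out of three.

```python
import math
import statistics


def sqrt_library(x):
    return math.sqrt(x)


def sqrt_power(x):
    return x ** 0.5


def sqrt_newton(x, iterations=60):
    """Newton's method with a fixed iteration count (adequate for moderate inputs)."""
    if x == 0:
        return 0.0
    estimate = max(x, 1.0)
    for _ in range(iterations):
        estimate = 0.5 * (estimate + x / estimate)
    return estimate


def voted_sqrt(x, routines=(sqrt_library, sqrt_power, sqrt_newton)):
    # Each routine computes the root independently; the median is the "vote".
    return statistics.median(routine(x) for routine in routines)


print(voted_sqrt(2.0))
```

Swapping one routine for a deliberately broken one (for instance, a function that always returns -1.0) leaves the voted result unchanged, which is the point of the technique.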

Architectural Feasibility

The architecture should demonstrate that the system is technically feasible.

Overengineering

Robustness is the ability of a system to continue to run after it detects an error.

In software, the chain isn’t as strong as its weakest link; it’s as weak as all the weak links multiplied together.

The architecture should clearly indicate whether programmers should err on the side of overengineering or on the side of doing the simplest thing that works.

Buy-vs.-Build Decisions

If the architecture isn’t using off-the-shelf components, it should explain the ways in which it expects custom-built components to surpass ready-made libraries and components.

Reuse Decisions

Change Strategy

The architecture should clearly describe a strategy for handling changes.

The architecture should show that possible enhancements have been considered and that the enhancements most likely are also the easiest to implement.

The architecture’s plan for changes can be as simple as one to put version numbers in data files, reserve fields for future use, or design files so that you can add new tables.

It might specify that data for the table is to be kept in an external file rather than coded inside the program, thus allowing changes in the program without recompiling.
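A small sketch of two of these ideas together, a version number in the data and table data kept outside the program, might look like the following. The JSON layout, the `load_rate_table` name, and the difference between version 1 and version 2 are all assumptions invented for the example. In practice the text would be read from an external file.

```python
import json

SUPPORTED_VERSIONS = {1, 2}  # hypothetical format versions


def load_rate_table(text):
    """Parse a rate table kept outside the program, checking its format version."""
    data = json.loads(text)
    version = data.get("version")
    if version not in SUPPORTED_VERSIONS:
        raise ValueError(f"unsupported table version: {version!r}")
    # Assumed history: version 1 stored percentages, version 2 stores fractions.
    scale = 0.01 if version == 1 else 1.0
    return {bracket: rate * scale for bracket, rate in data["rates"].items()}


print(load_rate_table('{"version": 2, "rates": {"low": 0.1, "high": 0.3}}'))
```

Because the version is stored with the data, older files keep working after the format changes, and the rates can be edited without modifying the program.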

General Architectural Quality

The architecture should describe the motivations for all major decisions.

Be wary of “we’ve always done it that way” justifications.

The architecture should tread the line between under-specifying and over-specifying the system. No part of the architecture should receive more attention than it deserves, or be over-designed.

Designers shouldn’t pay attention to one part at the expense of another.

The architecture should address all requirements without gold-plating (without containing elements that are not required).

The architecture should explicitly identify risky areas.

It should explain why they’re risky and what steps have been taken to minimize the risk.

Finally, you shouldn’t be uneasy about any parts of the architecture.

It shouldn’t contain anything just to please the boss. It shouldn’t contain anything that’s hard for you to understand.

Checklist: Architecture

Specific Architectural Topics

Is the overall organization of the program clear, including a good architectural overview and justification?

Are major building blocks well defined, including their areas of responsibility and their interfaces to other building blocks?

Are all the functions listed in the requirements covered sensibly, by neither too many nor too few building blocks?

Are the most critical classes described and justified?

Is the data design described and justified?

Is the database organization and content specified?

Are all key business rules identified and their impact on the system described?

Is a strategy for the user interface design described?

Is the user interface modularized so that changes in it won’t affect the rest of the program?

Is a strategy for handling I/O described and justified?

Are resource-use estimates and a strategy for resource management described and justified?

Are the architecture’s security requirements described?

Does the architecture set space and speed budgets for each class, subsystem, or functionality area?

Does the architecture describe how scalability will be achieved?

Does the architecture address interoperability?

Is a strategy for internationalization/localization described?

Is a coherent error-handling strategy provided?

Is the approach to fault tolerance defined (if any is needed)?

Has technical feasibility of all parts of the system been established?

Is an approach to overengineering specified?

Are necessary buy-vs.-build decisions included?

Does the architecture describe how reused code will be made to conform to other architectural objectives?

Is the architecture designed to accommodate likely changes?

General Architectural Quality

Does the architecture account for all the requirements?

Is any part over- or under-architected? Are expectations in this area set out explicitly?

Does the whole architecture hang together conceptually?

Is the top-level design independent of the machine and language that will be used to implement it?

Are the motivations for all major decisions provided?

Are you, as a programmer who will implement the system, comfortable with the architecture?

Amount of Time to Spend on Upstream Prerequisites

Generally, a well-run project devotes about 10 to 20 percent of its effort and about 20 to 30 percent of its schedule to requirements, architecture, and up-front planning.

These figures don’t include time for detailed design; that’s part of construction.

If requirements are unstable and you’re working on a large, formal project, you’ll probably have to work with a requirements analyst to resolve requirements problems that are identified early in construction.

Allow time to consult with the requirements analyst and for the requirements analyst to revise the requirements before you’ll have a workable version of the requirements.

If requirements are unstable and you’re working on a small, informal project, allow time for defining the requirements well enough that their volatility will have a minimal impact on construction.

If the requirements are unstable on any project—formal or informal—treat requirements work as its own project.

Estimate the time for the rest of the project after you’ve finished the requirements.

The clients you work with might not immediately understand why you want to plan requirements development as a separate project.

You might need to explain your reasoning to them.

Checklist: Upstream Prerequisites

Have you identified the kind of software project you’re working on and tailored your approach appropriately?

Are the requirements sufficiently well-defined and stable enough to begin construction (see the requirements checklist for details)?

Is the architecture sufficiently well defined to begin construction (see the architecture checklist for details)?

Have other risks unique to your particular project been addressed, such that construction is not exposed to more risk than necessary?

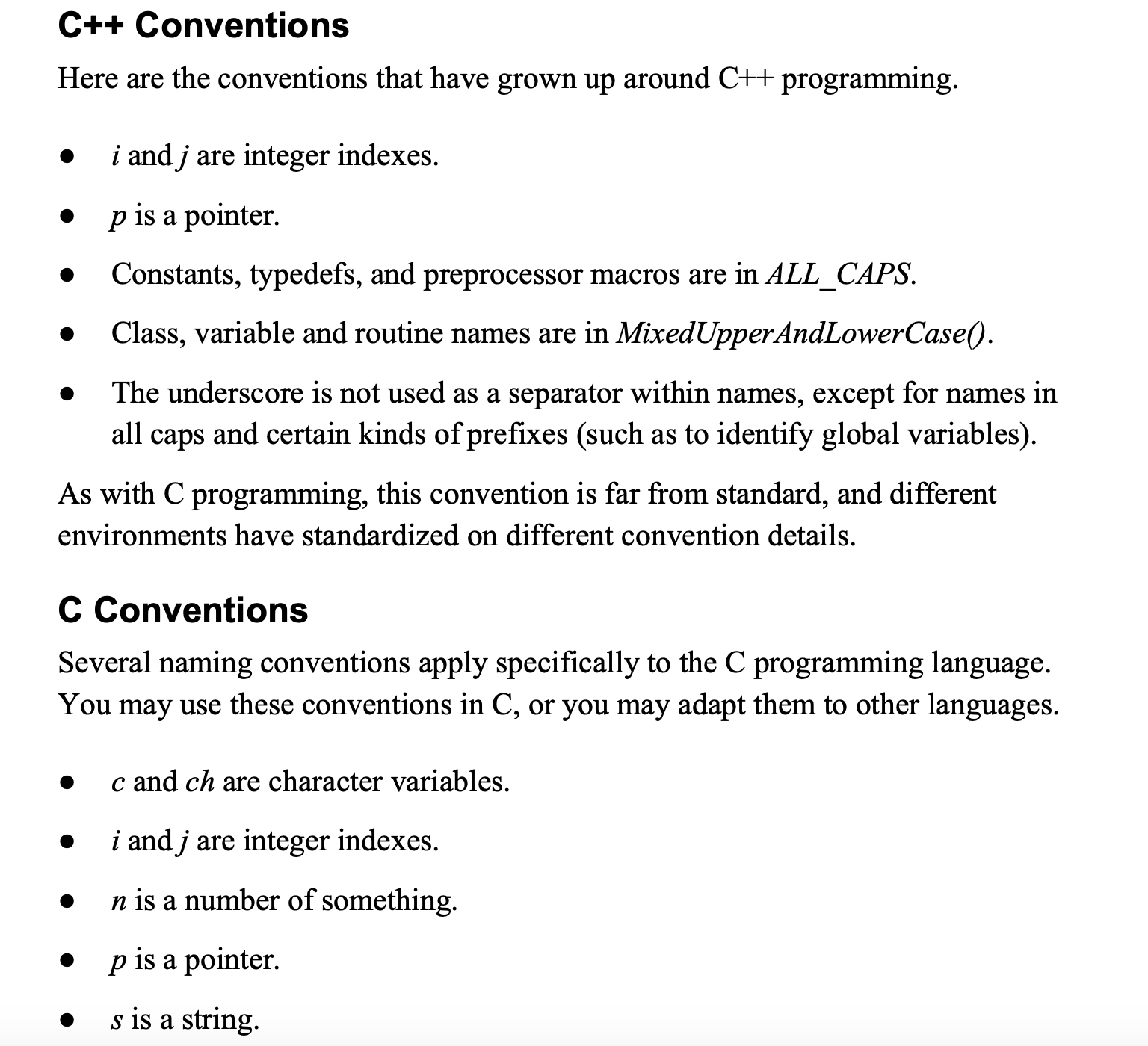

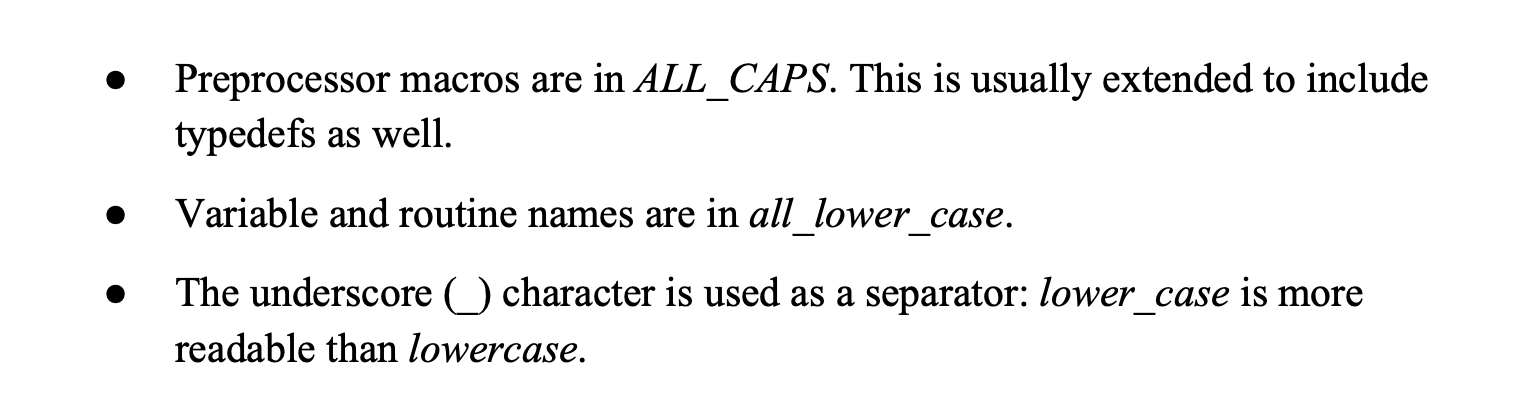

Choice of Programming Language

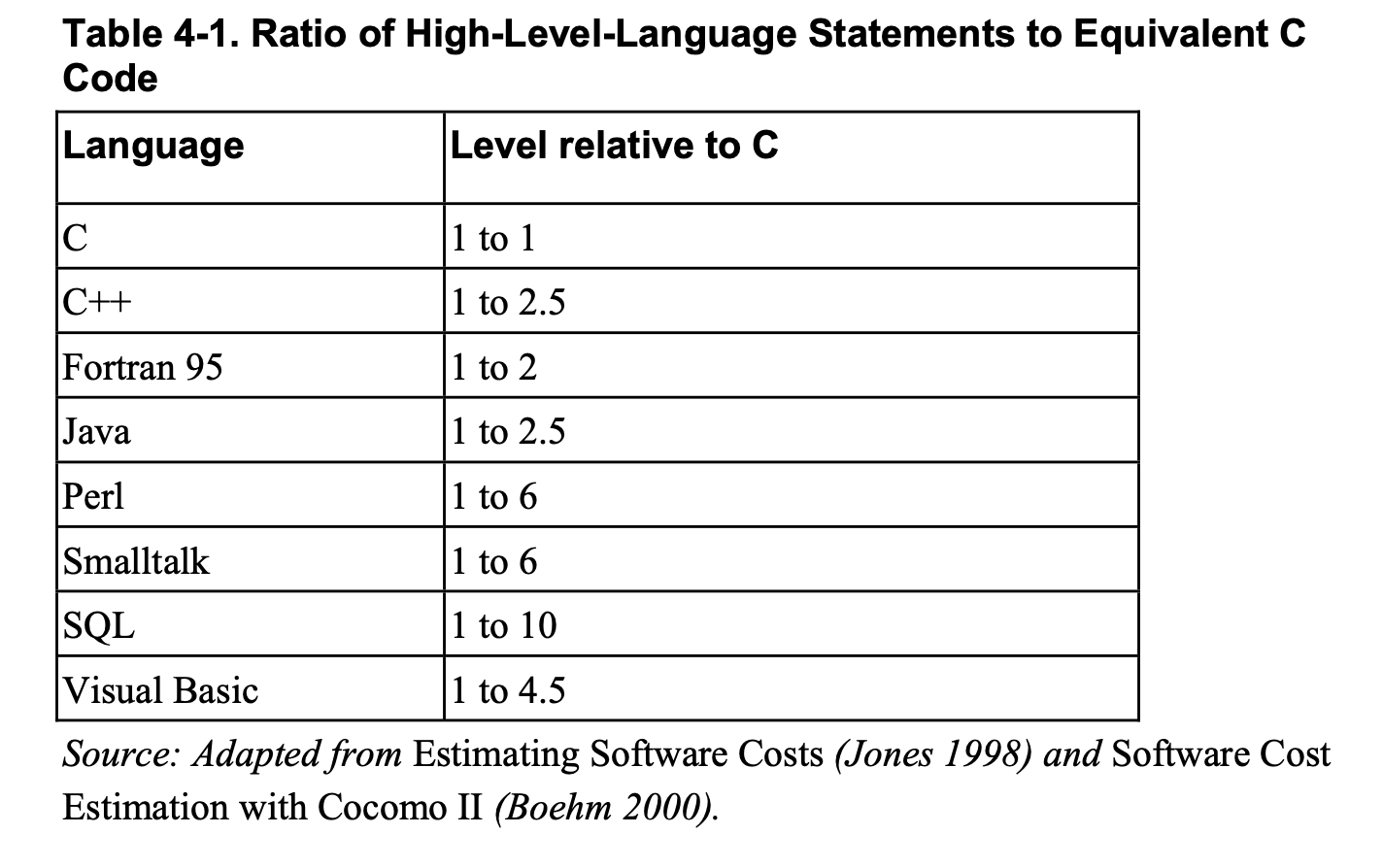

Programmers working with high-level languages achieve better productivity and quality than those working with lower-level languages.

Languages such as C++, Java, Smalltalk, and Visual Basic have been credited with improving productivity, reliability, simplicity, and comprehensibility by factors of 5 to 15 over low-level languages such as assembly and C (Brooks 1987, Jones 1998, Boehm 2000).

You save time when you don’t need to have an awards ceremony every time a C statement does what it’s supposed to.

Moreover, higher-level languages are more expressive than lower-level languages. Each line of code says more.

Programmers may be influenced by their languages in much the same way that speakers are influenced by the natural languages they think in.

The words available in a programming language for expressing your programming thoughts certainly determine how you express your thoughts and might even determine what thoughts you can express.

Evidence of the effect of programming languages on programmers’ thinking is common.

If your language lacks constructs that you want to use or is prone to other kinds of problems, try to compensate for them. Invent your own coding conventions, standards, class libraries, and other augmentations.

Checklist: Major Construction Practices

Coding

Have you defined coding conventions for names, comments, and formatting?

Have you defined specific coding practices that are implied by the architecture, such as how error conditions will be handled, how security will be addressed, and so on?

Have you identified your location on the technology wave and adjusted your approach to match? If necessary, have you identified how you will program into the language rather than being limited by programming in it?

Teamwork

Have you defined an integration procedure, that is, have you defined the specific steps a programmer must go through before checking code into the master sources?

Will programmers program in pairs, or individually, or some combination of the two?

Quality Assurance

Will programmers write test cases for their code before writing the code itself?

Will programmers write unit tests for their code regardless of whether they write them first or last?

Will programmers step through their code in the debugger before they check it in?

Will programmers integration-test their code before they check it in?

Will programmers review or inspect each other’s code?

Tools

Have you selected a revision control tool?

Have you selected a language and language version or compiler version?

Have you decided whether to allow use of non-standard language features?

Have you identified and acquired other tools you’ll be using—editor, refactoring tool, debugger, test framework, syntax checker, and so on?

- Every programming language has strengths and weaknesses. Be aware of the specific strengths and weaknesses of the language you’re using.

- Establish programming conventions before you begin programming. It’s nearly impossible to change code to match them later.

- More construction practices exist than you can use on any single project. Consciously choose the practices that are best suited to your project.

- Your position on the technology wave determines what approaches will be effective—or even possible. Identify where you are on the technology wave, and adjust your plans and expectations accordingly.

Design in Construction

Design is a Wicked Problem.

Horst Rittel and Melvin Webber defined a “wicked” problem as one that could be clearly defined only by solving it, or by solving part of it (1973).

This paradox implies, essentially, that you have to “solve” the problem once in order to clearly define it and then solve it again to create a solution that works.

Design is sloppy because you take many false steps and go down many blind alleys—you make a lot of mistakes.

Indeed, making mistakes is the point of design: it’s cheaper to make mistakes and correct designs than it would be to make the same mistakes, recognize them later, and have to correct full-blown code.

Design is a Heuristic Process.

Because design is non-deterministic, design techniques tend to be “heuristics”: “rules of thumb” or “things to try that sometimes work,” rather than repeatable processes that are guaranteed to produce predictable results. Design involves trial and error.

A tidy way of summarizing these attributes of design is to say that design is “emergent” (Bain and Shalloway 2004).

Designs don’t spring fully formed directly from someone’s brain.

They evolve and improve through design reviews, informal discussions, experience writing the code itself, and experience revising the code itself.

Accidental and Essential Difficulties

Brooks argues that software development is made difficult because of two different classes of problems—the essential and the accidental.

In philosophy, the essential properties are the properties that a thing must have in order to be that thing.

A car must have an engine, wheels, and doors to be a car. If it doesn’t have any of those essential properties, then it isn’t really a car.

Accidental properties are the properties a thing just happens to have, that don’t really bear on whether the thing is really that kind of thing.

A car could have a V8, a turbocharged 4-cylinder, or some other kind of engine and be a car regardless of that detail.

You could also think of accidental properties as coincidental, discretionary, optional, and happenstance.

Importance of Managing Complexity

When projects do fail for reasons that are primarily technical, the reason is often uncontrolled complexity.

The software is allowed to grow so complex that no one really knows what it does.

When a project reaches the point at which no one really understands the impact that code changes in one area will have on other areas, progress grinds to a halt.

We should try to organize our programs in such a way that we can safely focus on one part of it at a time.

The goal is to minimize the amount of a program you have to think about at any one time.

Desirable Characteristics of a Design

There are three sources of overly costly, ineffective designs:

This suggests a two-prong approach to managing complexity:

- Minimize the amount of essential complexity that anyone’s brain has to deal with at any one time.

- Keep accidental complexity from needlessly proliferating.

Here’s a list of internal design characteristics:

Minimal complexity

The primary goal of design should be to minimize complexity for all the reasons described in the last section. Avoid making “clever” designs.

Clever designs are usually hard to understand. Instead make “simple” and “easy-to-understand” designs.

Ease of maintenance

Ease of maintenance means designing for the maintenance programmer.

Continually imagine the questions a maintenance programmer would ask about the code you’re writing.

Design the system to be self-explanatory.

Minimal connectedness

Use the principles of strong cohesion, loose coupling, and information hiding to design classes with as few interconnections as possible.

Minimal connectedness minimizes work during integration, testing, and maintenance.

Extensibility

Reusability

High fan-in

High fan-in refers to having a high number of classes that use a given class.

High fan-in implies that a system has been designed to make good use of utility classes at the lower levels in the system.

Low-to-medium fan-out

Low-to-medium fan-out means having a given class use a low-to-medium number of other classes.

High fan-out (more than about seven) indicates that a class uses a large number of other classes and may therefore be overly complex.

Researchers have found that the principle of low fan-out is beneficial whether you’re considering the number of routines called from within a routine or from within a class.

Portability

Portability means designing the system so that you can easily move it to another environment.

Leanness

Leanness means designing the system so that it has no extra parts.

Voltaire said that a book is finished not when nothing more can be added but when nothing more can be taken away.

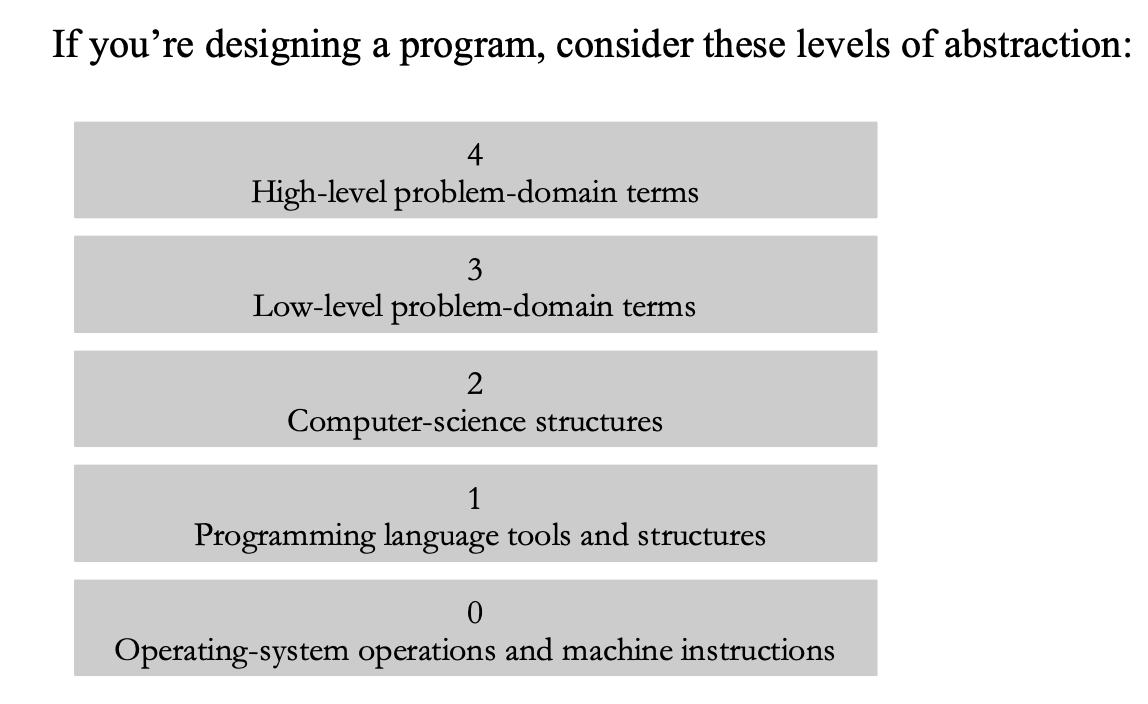

Stratification

Stratified design means trying to keep the levels of decomposition stratified so that you can view the system at any single level and get a consistent view.

Design the system so that you can view it at one level without dipping into other levels.

If you’re writing a modern system that has to use a lot of older, poorly designed code, write a layer of the new system that’s responsible for interfacing with the old code.

The beneficial effects of stratified design in such a case are (1) it compartmentalizes the messiness of the bad code and (2) if you’re ever allowed to jettison the old code, you won’t need to modify any new code except the interface layer.
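The interface-layer idea above can be sketched in a few lines. Everything here is hypothetical: `legacy_get_cust` stands in for an older routine with an awkward interface, and `CustomerGateway` is an assumed name for the one layer of the new system that is allowed to call it.

```python
def legacy_get_cust(rec_type, cust_no, flags):
    """Stand-in for old code: returns a pipe-delimited record, or "" if not found."""
    records = {42: "42|ADA LOVELACE|LONDON"}
    return records.get(cust_no, "")


class CustomerGateway:
    """The only part of the new system that knows about the legacy routine."""

    def find(self, customer_id):
        raw = legacy_get_cust("C", customer_id, 0)
        if not raw:
            return None
        number, name, city = raw.split("|")
        # Translate the legacy record into the form the new code expects.
        return {"id": int(number), "name": name.title(), "city": city.title()}


print(CustomerGateway().find(42))
```

If the legacy routine is ever replaced, only `CustomerGateway.find` would need to change under this arrangement.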

Standard techniques

Try to give the whole system a familiar feeling by using standardized, common approaches.

Levels of Design

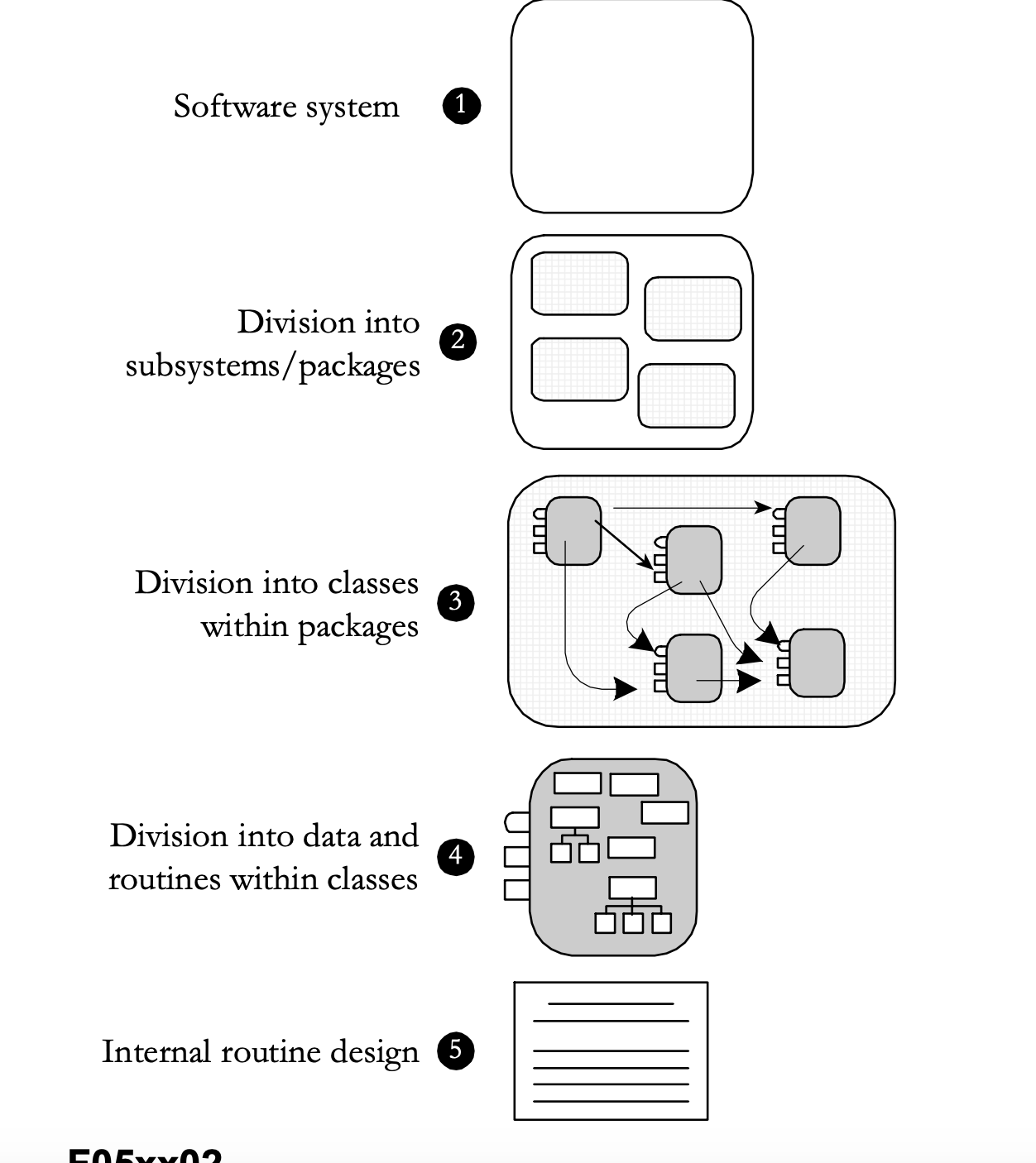

Level 1: Software System

The first level is the entire system. Some programmers jump right from the system level into designing classes, but it’s usually beneficial to think through higher level combinations of classes, such as subsystems or packages.

Level 2: Division into Subsystems or Packages

The main product of design at this level is the identification of all major subsystems.

The subsystems can be big—database, user interface, business logic, command interpreter, report engine, and so on.

The major design activity at this level is deciding how to partition the program into major subsystems and defining how each subsystem is allowed to use each other subsystem.

Of particular importance at this level are the rules about how the various subsystems can communicate.

If all subsystems can communicate with all other subsystems, you lose the benefit of separating them at all. Make the subsystem meaningful by restricting communications.

On large programs and families of programs, design at the subsystem level makes a difference.

If you believe that your program is small enough to skip subsystem-level design, at least make the decision to skip that level of design a conscious one.

Common Subsystems

- Business logic

Business logic is the laws, regulations, policies, and procedures that you encode into a computer system.

If you’re writing a payroll system, you might encode rules from the IRS about the number of allowable withholdings and the estimated tax rate.

- User interface

Create a subsystem to isolate user-interface components so that the user interface can evolve without damaging the rest of the program.

In most cases, a user-interface subsystem uses several subordinate subsystems or classes for the GUI, command-line interface, menu operations, window management, help system, and so forth.

- Database access

Subsystems that hide implementation details provide a valuable level of abstraction that reduces a program’s complexity.

You can hide the implementation details of accessing a database so that most of the program doesn’t need to worry about the messy details of manipulating low-level structures and can deal with the data in terms of how it’s used at the business-problem level.

- System dependencies
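The database-access item in the list above can be made concrete with a small sketch. It uses Python’s standard `sqlite3` module with an in-memory database. The `EmployeeStore` class, its method names, and the table layout are assumptions made for illustration.

```python
import sqlite3


class EmployeeStore:
    """Hypothetical database-access layer: callers never see SQL or cursors."""

    def __init__(self, path=":memory:"):
        self._conn = sqlite3.connect(path)
        self._conn.execute(
            "CREATE TABLE IF NOT EXISTS employee (name TEXT, department TEXT)"
        )

    def hire(self, name, department):
        with self._conn:  # commits on success, rolls back on error
            self._conn.execute(
                "INSERT INTO employee (name, department) VALUES (?, ?)",
                (name, department),
            )

    def names_in(self, department):
        rows = self._conn.execute(
            "SELECT name FROM employee WHERE department = ? ORDER BY name",
            (department,),
        )
        return [name for (name,) in rows]


store = EmployeeStore()
store.hire("Grace", "R&D")
store.hire("Ada", "R&D")
print(store.names_in("R&D"))
```

The rest of the program works in business terms (`hire`, `names_in`) and would not need to change if the storage engine did.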

Level 3: Division into Classes

Design at this level includes identifying all classes in the system.

For example, a database-interface subsystem might be further partitioned into data access classes and persistence framework classes as well as database metadata.

Level 4: Division into Routines

The class interface defined at Level 3 will define some of the routines.

Design at Level 4 will detail the class’s private routines.

When you examine the details of the routines inside a class, you can see that many routines are simple boxes, but a few are composed of hierarchically organized routines, which require still more design.

Level 5: Internal Routine Design

Design at the routine level consists of laying out the detailed functionality of the individual routines.

Internal routine design is typically left to the individual programmer working on an individual routine.

The design consists of activities such as writing pseudocode, looking up algorithms in reference books, deciding how to organize the paragraphs of code in a routine, and writing programming-language code.

Find Real-World Objects

The steps in designing with objects are

• Identify the objects and their attributes (methods and data).

• Determine what can be done to each object.

• Determine what each object can do to other objects.

• Determine the parts of each object that will be visible to other objects: which parts will be public and which will be private.

• Define each object’s public interface.
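The steps above can be sketched with two small classes. The billing scenario and the names `Employee` and `Client` are assumptions chosen for illustration. Python marks private members only by convention, with a leading underscore.

```python
class Employee:
    def __init__(self, name, hourly_rate):
        self.name = name                 # public data
        self._hourly_rate = hourly_rate  # private by convention
        self._hours = 0.0                # private by convention

    def log_hours(self, hours):
        """Something that can be done to an Employee."""
        if hours < 0:
            raise ValueError("hours must be non-negative")
        self._hours += hours

    def amount_due(self):
        """Part of the public interface; hides how charges are computed."""
        return self._hours * self._hourly_rate


class Client:
    def __init__(self, name):
        self.name = name

    def bill_for(self, employee):
        """Something a Client can do with another object, via its public interface."""
        return f"{self.name} owes {employee.amount_due():.2f} for {employee.name}"
```

`Client` relies only on `name` and `amount_due()`, so the way an `Employee` tracks hours and rates can change without affecting it.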

Form Consistent Abstractions

Abstraction is the ability to engage with a concept while safely ignoring some of its details, handling different details at different levels.

Base classes are abstractions that allow you to focus on common attributes of a set of derived classes and ignore the details of the specific classes while you’re working on the base class.

A good class interface is an abstraction that allows you to focus on the interface without needing to worry about the internal workings of the class.
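As a hedged illustration of a base class acting as an abstraction, the sketch below uses a hypothetical `Shape` hierarchy. The class names and the `describe` method are assumptions for this example. Code written against `Shape` can ignore which derived class it is handling.

```python
import math
from abc import ABC, abstractmethod


class Shape(ABC):
    """Base-class abstraction: callers only need to know that a shape has an area."""

    @abstractmethod
    def area(self):
        ...

    def describe(self):
        # Works for any derived class without knowing its details.
        return f"{type(self).__name__} with area {self.area():.2f}"


class Circle(Shape):
    def __init__(self, radius):
        self._radius = radius

    def area(self):
        return math.pi * self._radius ** 2


class Rectangle(Shape):
    def __init__(self, width, height):
        self._width = width
        self._height = height

    def area(self):
        return self._width * self._height


for shape in (Circle(1), Rectangle(2, 3)):
    print(shape.describe())
```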

Encapsulate Implementation Details

Abstraction says, “You’re allowed to look at an object at a high level of detail.”

Encapsulation says, “Furthermore, you aren’t allowed to look at an object at any other level of detail.”

Inherit When Inheritance Simplifies the Design

Hide Secrets (Information Hiding)

In structured design, the notion of “black boxes” comes from information hiding.

In object-oriented design, it gives rise to the concepts of encapsulation and modularity, and it is associated with the concept of abstraction.

Secrets and the Right to Privacy

One key task in designing a class is deciding which features should be known outside the class and which should remain secret.

Designing the class interface is an iterative process just like any other aspect of design.

If you don’t get the interface right the first time, try a few more times until it stabilizes. If it doesn’t stabilize, you need to try a different approach.

Information hiding is useful at all levels of design, from the use of named constants instead of literals, to creation of data types, to class design, routine design, and subsystem design.

Excessive Distribution Of Information

One common barrier to information hiding is an excessive distribution of information throughout a system. You might have hard-coded the literal 100 throughout a system. Using 100 as a literal decentralizes references to it. It’s better to hide the information in one place, in a constant MAX_EMPLOYEES perhaps, whose value is changed in only one place.
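A minimal sketch of the named-constant approach follows. `MAX_EMPLOYEES` comes from the text above, while the two helper functions are hypothetical uses of it.

```python
MAX_EMPLOYEES = 100  # the single place where the limit is defined


def can_hire(current_count):
    # Refers to the constant rather than repeating the literal 100.
    return current_count < MAX_EMPLOYEES


def remaining_slots(current_count):
    return max(0, MAX_EMPLOYEES - current_count)
```

Raising the limit now means editing one line instead of hunting for every literal 100 and deciding which ones mean "maximum employees".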

Another example of excessive information distribution is interleaving interaction with human users throughout a system. If the mode of interaction changes—say, from a GUI interface to a command-line interface—virtually all the code will have to be modified. It’s better to concentrate user interaction in a single class, package, or subsystem you can change without affecting the whole system.

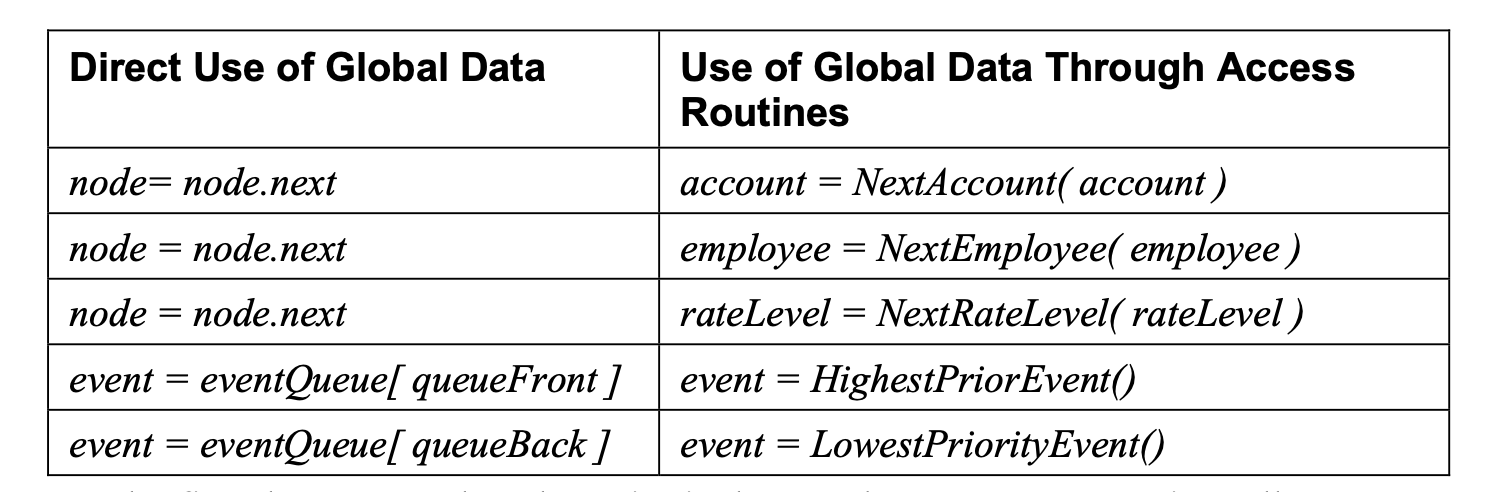

Yet another example would be a global data element—perhaps an array of employee data with 1000 elements maximum that’s accessed throughout a program. If the program uses the global data directly, information about the data item’s implementation—such as the fact that it’s an array and has a maximum of 1000 elements—will be spread throughout the program. If the program uses the data only through access routines, only the access routines will know the implementation details.
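As a minimal sketch of the access-routine idea, the class below keeps both the storage choice and the size limit in one place. The names `EmployeeTable`, `Add`, `NameAt`, and `Count` are hypothetical and chosen only for illustration; the limit of 1000 comes from the example in the text.

```cpp
#include <array>
#include <cstddef>
#include <stdexcept>
#include <string>

// Hypothetical class: callers see access routines, never the array itself.
class EmployeeTable {
public:
    // The limit is stated once; changing it touches only this line.
    static constexpr std::size_t MAX_EMPLOYEES = 1000;

    void Add(const std::string& name) {
        if (count_ >= MAX_EMPLOYEES) {
            throw std::length_error("employee table is full");
        }
        names_[count_++] = name;
    }

    const std::string& NameAt(std::size_t index) const {
        if (index >= count_) {
            throw std::out_of_range("no employee at that index");
        }
        return names_[index];
    }

    std::size_t Count() const { return count_; }

private:
    // Implementation detail: a fixed array today, possibly something else later.
    std::array<std::string, MAX_EMPLOYEES> names_;
    std::size_t count_ = 0;
};
```

If the storage later becomes a growable container, only the private section and the bodies of the access routines should need to change.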

Circular Dependencies

A more subtle barrier to information hiding is circular dependencies, as when a routine in class A calls a routine in class B, and a routine in class B calls a routine in class A.

Avoid such dependency loops.

Class Data Mistaken For Global Data

Global data is generally subject to two problems: (1) Routines operate on global data without knowing that other routines are operating on it; and (2) routines are aware that other routines are operating on the global data, but they don’t know exactly what they’re doing to it.

Perceived Performance Penalties

A final barrier to information hiding can be an attempt to avoid performance penalties at both the architectural and the coding levels. You don’t need to worry at either level.

Value of Information Hiding

The difference between object-oriented design and information hiding in this example is more subtle than a clash of explicit rules and regulations.

Object-oriented design would approve of this design decision as much as information hiding would.

Rather, the difference is one of heuristics—thinking about information hiding inspires and promotes design decisions that thinking about objects does not.

Identify Areas Likely to Change

- Identify items that seem likely to change.

- Separate items that are likely to change.

- Isolate items that seem likely to change.

Here are a few areas that are likely to change:

- Business logic

- Hardware dependencies

- Input and output

- Nonstandard language features

- Difficult design and construction areas

It’s a good idea to hide difficult design and construction areas because they might be done poorly and you might need to do them again.

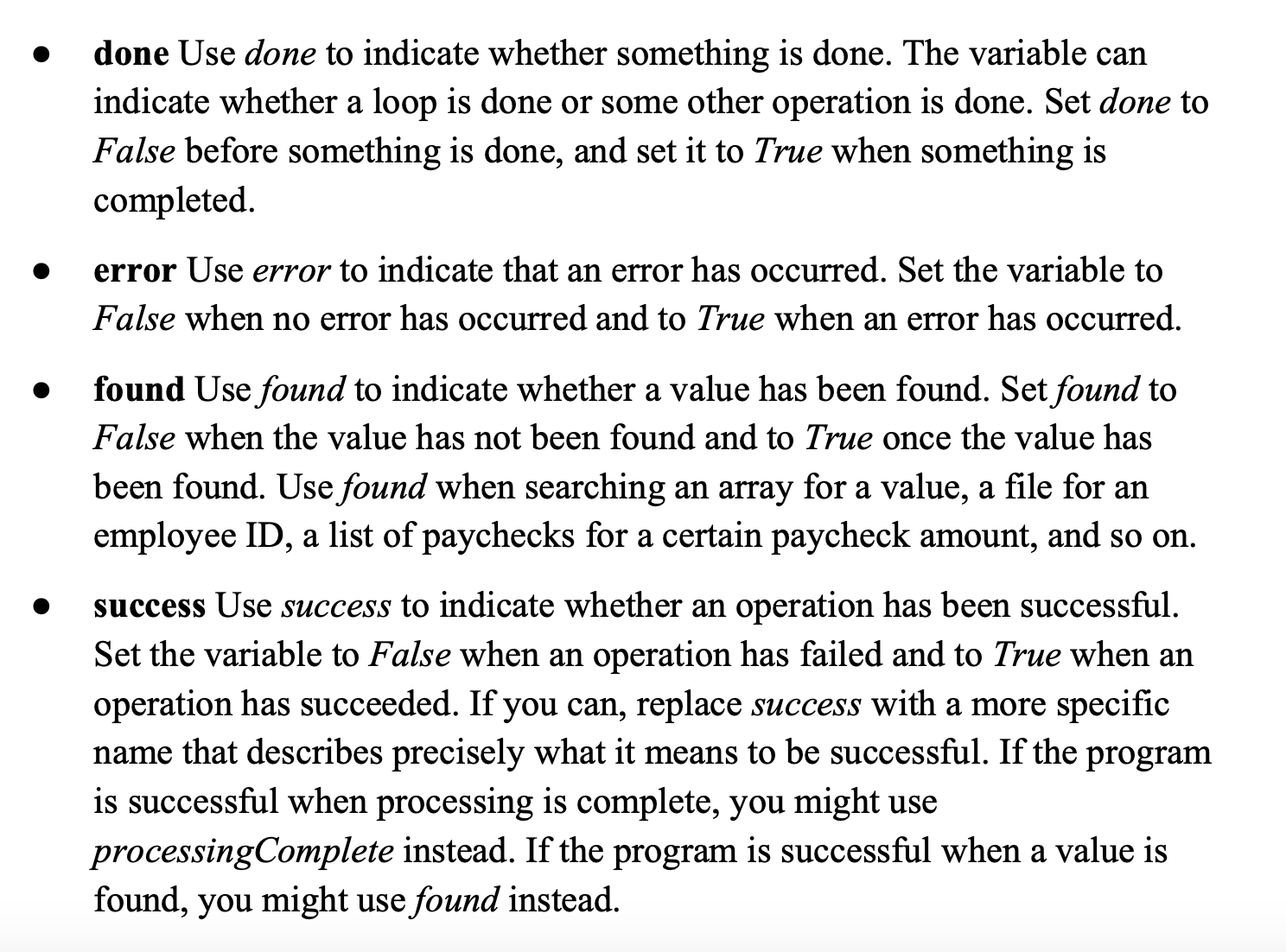

- Status variables

You can add at least two levels of flexibility and readability to your use of status variables:

Don’t use a boolean variable as a status variable. Use an enumerated type instead.

It’s common to add a new state to a status variable, and adding a new type to an enumerated type requires a mere recompilation rather than a major revision of every line of code that checks the variable.

Use access routines rather than checking the variable directly.

By checking the access routine rather than the variable, you allow for the possibility of more sophisticated state detection.

For example, if you wanted to check combinations of an error-state variable and a current-function-state variable, it would be easy to do if the test were hidden in a routine and hard to do if it were a complicated test hard-coded throughout the program.

- Data-size constraints
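The status-variable advice above (enumerated types plus access routines) might look like the following sketch. The states and the `Session` class are hypothetical; the point is only that the combined test of an error state and a function state lives in one routine.

```cpp
// Hypothetical states, for illustration only.
enum class ErrorState { None, Warning, Fatal };
enum class FunctionState { Idle, Printing, Saving };

class Session {
public:
    void SetErrorState(ErrorState state) { errorState_ = state; }
    void SetFunctionState(FunctionState state) { functionState_ = state; }

    // Access routine: the combined check is written once, not at every call site.
    bool CanStartPrinting() const {
        return errorState_ != ErrorState::Fatal &&
               functionState_ == FunctionState::Idle;
    }

private:
    ErrorState errorState_ = ErrorState::None;
    FunctionState functionState_ = FunctionState::Idle;
};
```

Adding a new state later means extending the enumeration and revisiting `CanStartPrinting()`, rather than hunting for every place the raw variables were tested.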

Anticipating Different Degrees of Change

A good technique for identifying areas likely to change is first to identify the minimal subset of the program that might be of use to the user.

The subset makes up the core of the system and is unlikely to change.

Next, define minimal increments to the system. They can be so small that they seem trivial.

These areas of potential improvement constitute potential changes to the system; design these areas using the principles of information hiding.

Keep Coupling Loose

Coupling describes how tightly a class or routine is related to other classes or routines.

The goal is to create classes and routines with small, direct, visible, and flexible relations to other classes and routines (loose coupling).

Coupling Criteria

- Size

Size refers to the number of connections between modules.

With coupling, small is beautiful because it’s less work to connect other modules to a module that has a smaller interface.

- Visibility

Visibility refers to the prominence of the connection between two modules.

You get lots of credit for making your connections as blatant as possible.

Passing data in a parameter list is making an obvious connection and is therefore good.

Modifying global data so that another module can use that data is a sneaky connection and is therefore bad.

Documenting the global-data connection makes it more obvious and is slightly better.

- Flexibility

Flexibility refers to how easily you can change the connections between modules.

Kinds of Coupling

- Simple-data-parameter coupling

Two modules are simple-data-parameter coupled if all the data passed between them are of primitive data types and all the data is passed through parameter lists.

This kind of coupling is normal and acceptable.

- Simple-object coupling

A module is simple-object coupled to an object if it instantiates that object.

This kind of coupling is fine.

- Object-parameter coupling

Two modules are object-parameter coupled to each other if Object1 requires Object2 to pass it an Object3.

This kind of coupling is tighter than Object1 requiring Object2 to pass it only primitive data types.

- Semantic coupling

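Semantic coupling occurs when one module makes use not of some syntactic element of another module but of some semantic knowledge of that module’s inner workings. Here are some examples: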

- Module1 passes a control flag to Module2 that tells Module2 what to do. This approach requires Module1 to make assumptions about the internal workings of Module2, namely, what Module2 is going to do with the control flag. If Module2 defines a specific data type for the control flag (enumerated type or object), this usage is probably OK.

- Module2 uses global data after the global data has been modified by Module1. This approach requires Module2 to assume that Module1 has modified the data in the ways Module2 needs it to be modified, and that Module1 has been called at the right time.

- Module1’s interface states that its Module1.Initialize() routine should be called before its Module1.Routine1() is called. Module2 knows that Module1.Routine1() calls Module1.Initialize() anyway, so it just instantiates Module1 and calls Module1.Routine1() without calling Module1.Initialize() first.

- Module1 passes Object to Module2. Because Module1 knows that Module2 uses only three of Object’s seven methods, it initializes Object only partially, with the specific data those three methods need.

- Module1 passes BaseObject to Module2. Because Module2 knows that Module1 is really passing it DerivedObject, it casts BaseObject to DerivedObject and calls methods that are specific to DerivedObject.

- DerivedClass modifies BaseClass’s protected member data directly.

Semantic coupling is dangerous because changing code in the used module can break code in the using module in ways that are completely undetectable by the compiler.
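To make the compiler-invisibility point concrete, here is a small sketch of the BaseObject/DerivedObject case. The member names (`Name`, `DerivedOnlyDetail`) and the two free functions are hypothetical. Both functions compile cleanly, but only the second is safe if a caller ever passes a plain `BaseObject`.

```cpp
#include <string>

class BaseObject {
public:
    virtual ~BaseObject() = default;
    virtual std::string Name() const { return "base"; }
};

class DerivedObject : public BaseObject {
public:
    std::string Name() const override { return "derived"; }
    std::string DerivedOnlyDetail() const { return "detail"; }
};

// Semantically coupled: valid only while every caller really passes a
// DerivedObject. Nothing in the signature says so, and the compiler
// cannot check it.
std::string DetailAssumingDerived(const BaseObject& object) {
    return static_cast<const DerivedObject&>(object).DerivedOnlyDetail();
}

// Loosely coupled: depends only on what BaseObject's interface promises.
std::string NameViaInterface(const BaseObject& object) {
    return object.Name();
}
```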

The point of loose coupling is that an effective module provides an additional level of abstraction—once you write it, you can take it for granted.

It reduces overall program complexity and allows you to focus on one thing at a time.

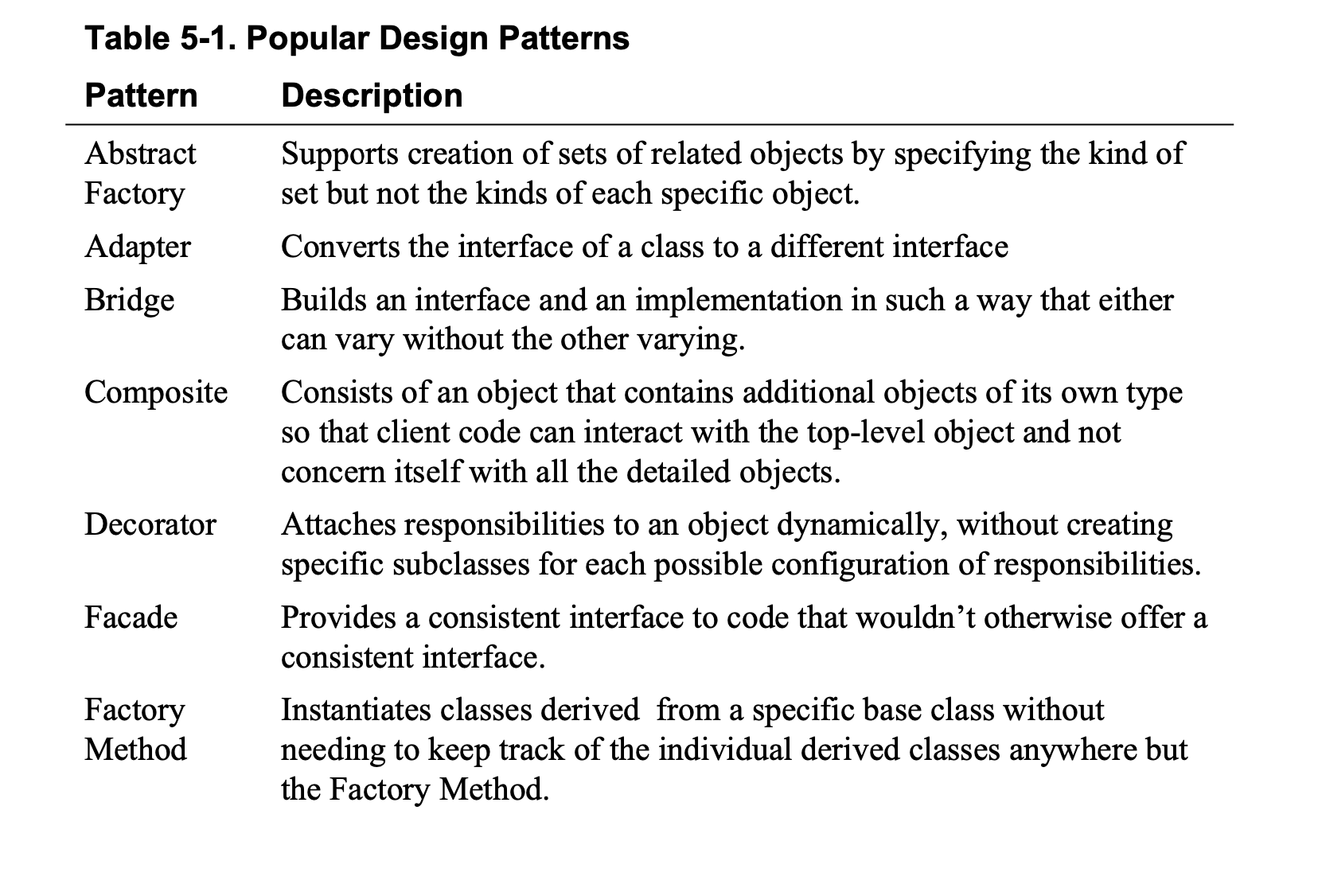

Look for Common Design Patterns

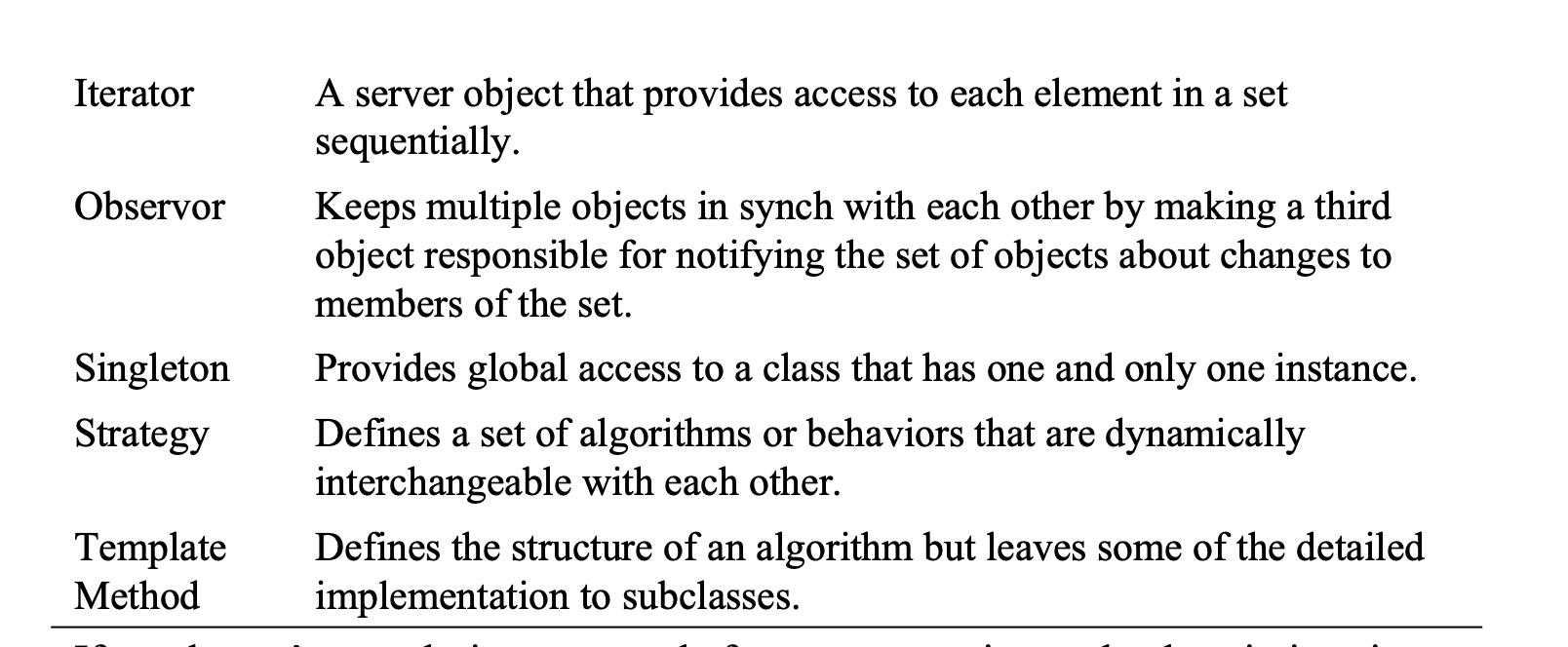

Common patterns include Adapter, Bridge, Decorator, Facade, Factory Method, Observer, Singleton, Strategy, and Template Method.

Other Heuristics:

- Aim for Strong Cohesion -> strong cohesion within each class, loose coupling between classes

- Build Hierarchies

Hierarchies are a useful tool for achieving Software’s Primary Technical Imperative because they allow you to focus on only the level of detail you’re currently concerned with.

The details don’t go away completely; they’re simply pushed to another level so that you can think about them when you want to rather than thinking about all the details all of the time.

- Formalize Class Contracts

Typically, the contract is something like “If you promise to provide data x, y, and z and you promise they’ll have characteristics a, b, and c, I promise to perform operations 1, 2, and 3 within constraints 8, 9, and 10.”

The promises the clients of the class make to the class are typically called “preconditions,” and the promises the object makes to its clients are called the “postconditions.”

- Assign Responsibilities

- Design for Test

- Avoid Failure

The high-profile security lapses of various well-known systems the past few years make it hard to disagree that we should find ways to apply Petroski’s design-failure insights to software.

- Choose Binding Time Consciously

- Make Central Points of Control

Control can be centralized in classes, routines, preprocessor macros, #include files—even a named constant is an example of a central point of control.

- Consider Using Brute Force

A brute-force solution that works is better than an elegant solution that doesn’t work.

- Draw a Diagram

- Keep Your Design Modular
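For the Formalize Class Contracts heuristic in the list above, one common way to write preconditions and postconditions down is with assertions. The routine below is a hypothetical sketch, not a prescribed technique: the precondition is that the input is non-negative, and the postconditions are that the result is non-negative and squares back to roughly the input. The tolerance is an arbitrary assumption.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical routine with its contract expressed as assertions.
double SquareRootOf(double value) {
    // Precondition: the client promises a non-negative input.
    assert(value >= 0.0 && "precondition: value must be non-negative");

    double result = std::sqrt(value);

    // Postconditions: what the routine promises in return.
    assert(result >= 0.0 && "postcondition: result is non-negative");
    assert(std::fabs(result * result - value) <= 1e-9 * (1.0 + value) &&
           "postcondition: result squared approximates value");
    return result;
}
```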

Guidelines for Using Heuristics

- Understanding the Problem.

- Devising a Plan.

- Carrying out the Plan.

- Looking Back.

One of the most effective guidelines is not to get stuck on a single approach.

If diagramming the design in UML isn’t working, write it in English.

Write a short test program.

Try a completely different approach.

Think of a brute-force solution.

Keep outlining and sketching with your pencil, and your brain will follow.

If all else fails, walk away from the problem.

Literally go for a walk, or think about something else before returning to the problem.

If you’ve given it your best and are getting nowhere, putting it out of your mind for a time often produces results more quickly than sheer persistence can.

Top-Down and Bottom-Up Design Approaches

Top-down design begins at a high level of abstraction.

You define base classes or other non-specific design elements.

As you develop the design, you increase the level of detail, identifying derived classes, collaborating classes, and other detailed design elements.

Bottom-up design starts with specifics and works toward generalities. It typically begins by identifying concrete objects and then generalizes aggregations of objects and base classes from those specifics.

How far do you decompose a program? Continue decomposing until it seems as if it would be easier to code the next level than to decompose it.

Work until you become somewhat impatient at how obvious and easy the design seems.

If you need to work with something more tangible, try the bottom-up design approach.

Ask yourself, “What do I know this system needs to do?”

You might identify a few low-level responsibilities that you can assign to concrete classes.

Ask yourself what you know the system needs to do.

Identify concrete objects and responsibilities from that question.

Identify common objects and group them using subsystem organization, packages, composition within objects, or inheritance, whichever is appropriate.

Continue with the next level up, or go back to the top and try again to work down.

To summarize, top down tends to start simple, but sometimes low-level complexity ripples back to the top, and those ripples can make things more complex than they really needed to be.

Bottom up tends to start complex, but identifying that complexity early on leads to better design of the higher-level classes—if the complexity doesn’t torpedo the whole system first!

Experimental Prototyping

Prototyping works poorly when developers aren’t disciplined about writing the absolute minimum of code needed to answer a question.

Prototyping also works poorly when the design question is not specific enough.

A final risk of prototyping arises when developers do not treat the code as throwaway code.

By adopting the attitude that once the question is answered the code will be thrown away, you can minimize this risk.

Capturing Your Design Work

- Insert design documentation into the code itself

- Capture design discussions and decisions on a Wiki

- Write email summaries

- Use a digital camera

- Save design flipcharts

- Use CRC cards

- Create UML diagrams at appropriate levels of detail

CHECKLIST: Design in Construction

Have you iterated, selecting the best of several attempts rather than the first attempt?

Have you tried decomposing the system in several different ways to see which way will work best?

Have you approached the design problem both from the top down and from the bottom up?

Have you prototyped risky or unfamiliar parts of the system, creating the absolute minimum amount of throwaway code needed to answer specific questions?

Has your design been reviewed, formally or informally, by others?

Have you driven the design to the point that its implementation seems obvious?

Have you captured your design work using an appropriate technique such as a Wiki, email, flipcharts, digital camera, UML, CRC cards, or comments in the code itself?

Does the design adequately address issues that were identified and deferred at the architectural level?

Is the design stratified into layers?

Are you satisfied with the way the program has been decomposed into subsystems, packages, and classes?

Are you satisfied with the way the classes have been decomposed into routines?

Are classes designed for minimal interaction with each other?

Are classes and subsystems designed so that you can use them in other systems?

Will the program be easy to maintain?

Is the design lean? Are all of its parts strictly necessary?

Does the design use standard techniques and avoid exotic, hard-to-understand elements?

Overall, does the design help minimize both accidental and essential complexity?

Working Classes

Good Class Interfaces

The first and probably most important step in creating a high quality class is creating a good interface.

Good Abstraction

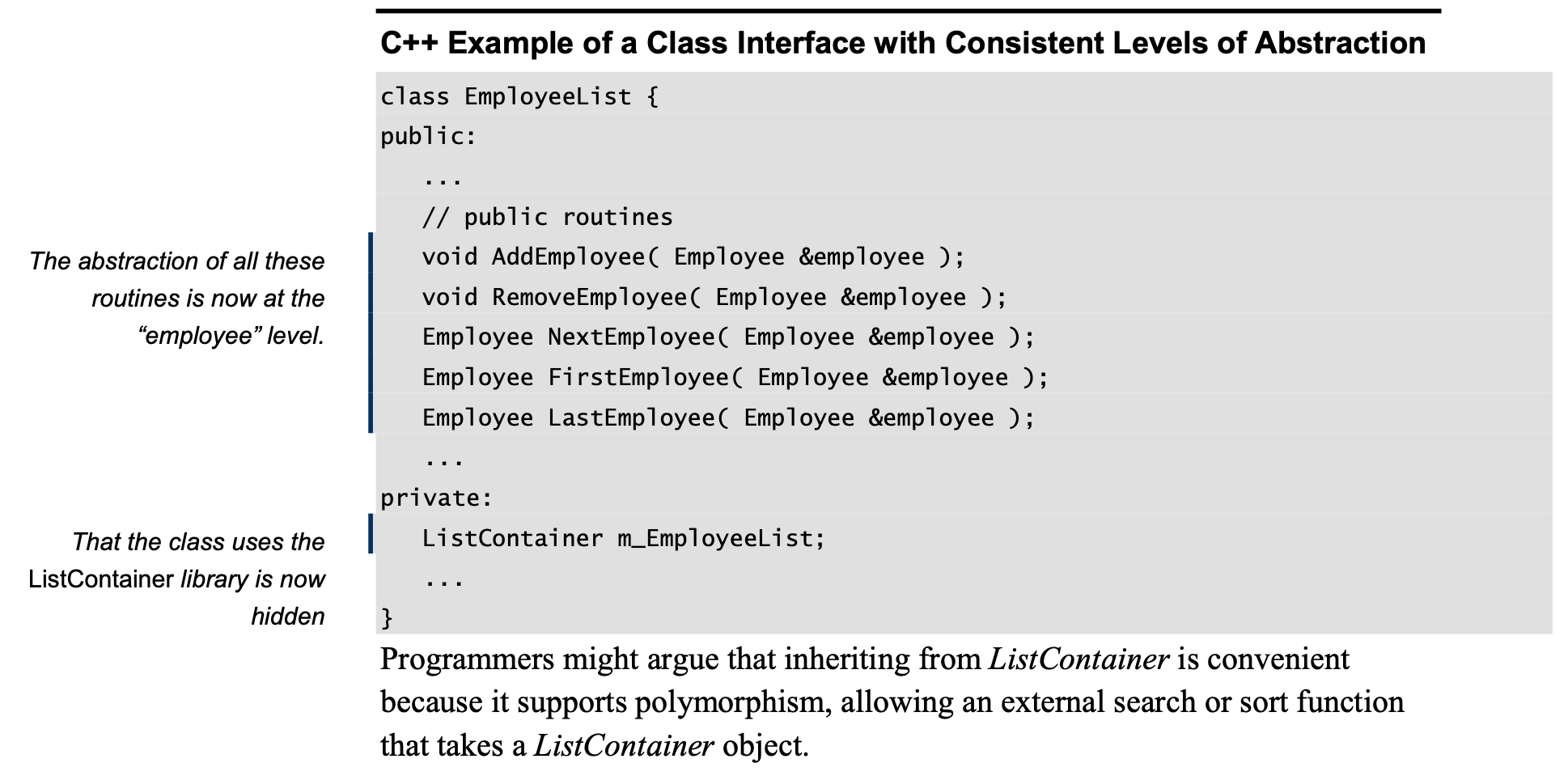

Present a consistent level of abstraction in the class interface.

Each class should implement one and only one abstract data type (ADT).

Move unrelated information to another class.

Beware of erosion of the interface’s abstraction under modification.

Don’t add public members that are inconsistent with the interface abstraction.

Consider abstraction and cohesion together.

Good Encapsulation

Minimize accessibility of classes and members.

Don’t expose member data in public.

Don’t put private implementation details in a class’s interface.

Don’t make assumptions about the class’s users.

Avoid friend classes.

In a few circumstances such as the State pattern, friend classes can be used in a disciplined way that contributes to managing complexity.

Don’t put a routine into the public interface just because it uses only public routines.

Favor read-time convenience to write-time convenience.

Be very, very wary of semantic violations of encapsulation.

Here are some examples of the ways that a user of a class can break encapsulation semantically:

- Not calling Class A’s Initialize() routine because you know that Class A’s PerformFirstOperation() routine calls it automatically.

- Not calling the database.Connect() routine before you call employee.Retrieve( database ) because you know that the employee.Retrieve() function will connect to the database if there isn’t already a connection.

- Not calling Class A’s Terminate() routine because you know that Class A’s PerformFinalOperation() routine has already called it.

- Using a pointer or reference to ObjectB created by ObjectA even after ObjectA has gone out of scope, because you know that ObjectA keeps ObjectB in static storage, and ObjectB will still be valid.

- Using ClassB’s MAXIMUM_ELEMENTS constant instead of using ClassA.MAXIMUM_ELEMENTS, because you know that they’re both equal to the same value.

Watch for coupling that’s too tight.

Design and Implementation Issues

Containment (“has a” relationships)

Implement “has a” through private inheritance as a last resort.

In some instances you might find that you can’t achieve containment through making one object a member of another. In that case, some experts suggest privately inheriting from the contained object.

Be critical of classes that contain more than about seven members.

Inheritance (“is a” relationships)

When you decide to use inheritance, you have to make several decisions:

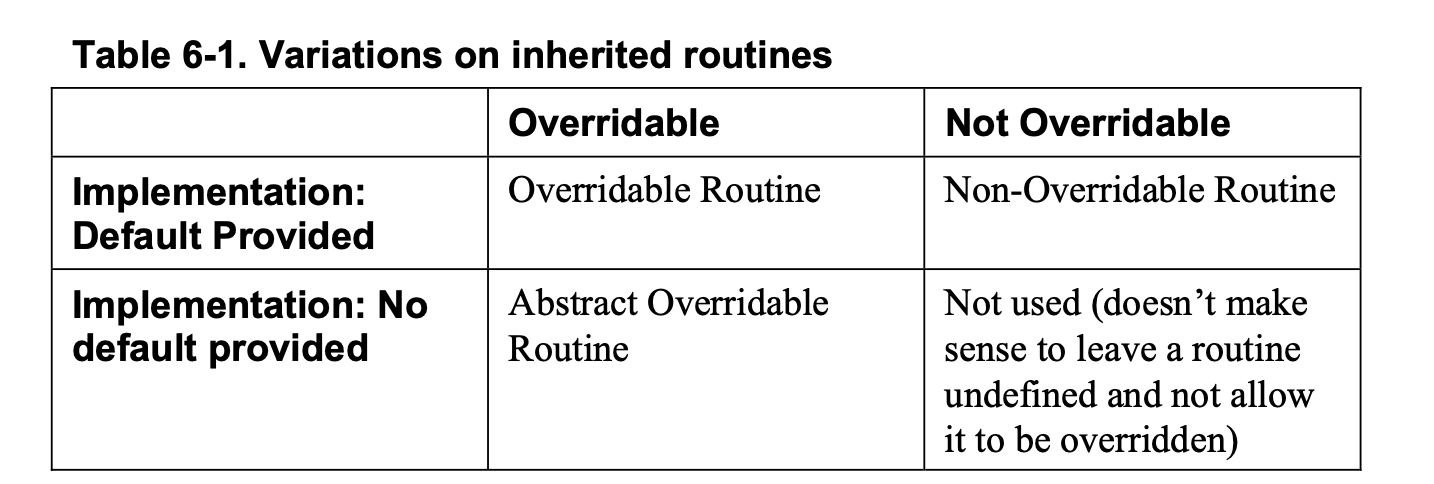

- For each member routine, will the routine be visible to derived classes? Will it have a default implementation? Will the default implementation be overridable?

- For each data member (including variables, named constants, enumerations, and so on), will the data member be visible to derived classes?

Implement “is a” through public inheritance.

If the derived class isn’t going to adhere completely to the same interface contract defined by the base class, inheritance is not the right implementation technique.

Design and document for inheritance or prohibit it.

Adhere to the Liskov Substitution Principle.

Be sure to inherit only what you want to inherit.

As the table suggests, inherited routines come in three basic flavors:

- An abstract overridable routine means that the derived class inherits the routine’s interface but not its implementation.

- An overridable routine means that the derived class inherits the routine’s interface and a default implementation, and it is allowed to override the default implementation.

- A non-overridable routine means that the derived class inherits the routine’s interface and its default implementation, and it is not allowed to override the routine’s implementation.
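In C++ terms, the three flavors above map roughly onto pure virtual, virtual, and non-virtual member functions. The sketch below uses hypothetical classes (`Document`, `Invoice`, `Memo`) to show one of each.

```cpp
#include <string>

class Document {  // hypothetical base class
public:
    virtual ~Document() = default;

    // Abstract overridable: interface only (pure virtual).
    virtual std::string Body() const = 0;

    // Overridable: interface plus a default implementation.
    virtual std::string Title() const { return "Untitled"; }

    // Non-overridable: not virtual, so derived classes inherit it as is.
    std::string Render() const { return Title() + ": " + Body(); }
};

class Invoice : public Document {
public:
    std::string Body() const override { return "amount due"; }
    std::string Title() const override { return "Invoice"; }
};

class Memo : public Document {
public:
    std::string Body() const override { return "pending"; }
    // Title() is inherited with its default implementation.
};
```

Here `Invoice().Render()` yields `"Invoice: amount due"`, while `Memo().Render()` falls back to the default title and yields `"Untitled: pending"`.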

Don’t “override” a non-overridable member function.

If a function is private in the base class, a derived class can create a function with the same name. To the programmer reading the code in the derived class, such a function can create confusion because it looks like it should be polymorphic, but it isn’t; it just has the same name.

Move common interfaces, data, and behavior as high as possible in the inheritance tree.

Be suspicious of classes of which there is only one instance.

Be suspicious of base classes of which there is only one derived class.

Be suspicious of classes that override a routine and do nothing inside the derived routine.

Avoid deep inheritance trees.

In his excellent book Object-Oriented Design Heuristics, Arthur Riel suggests limiting inheritance hierarchies to a maximum of six levels.

In my experience most people have trouble juggling more than two or three levels of inheritance in their brains at once.

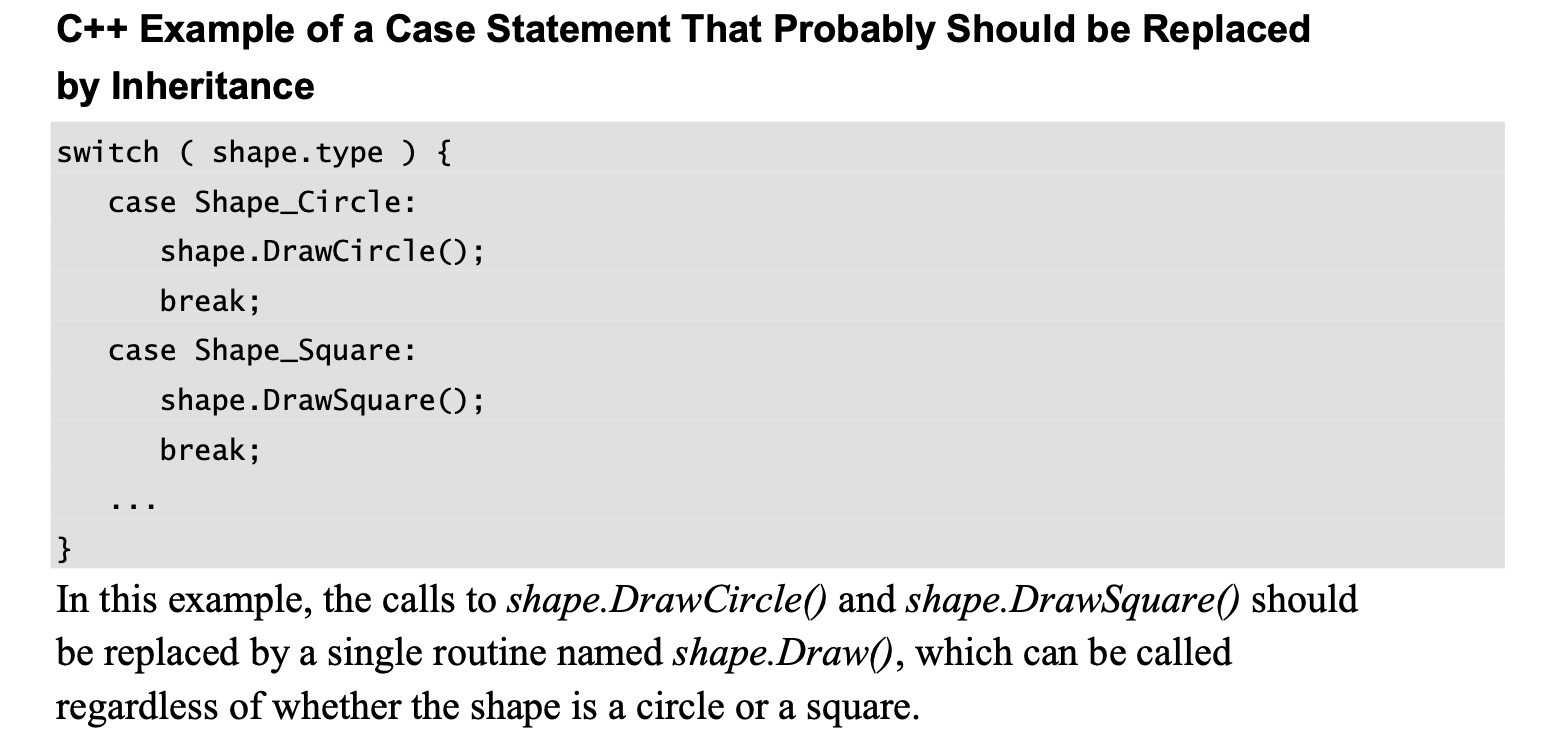

Prefer inheritance to extensive type checking.
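As a sketch of what this guideline tends to mean in practice: instead of a `switch` on a type code at every call site, each derived class answers the question itself through a virtual routine. The shape classes and `TotalArea` are hypothetical examples.

```cpp
#include <memory>
#include <vector>

class Shape {
public:
    virtual ~Shape() = default;
    virtual double Area() const = 0;
};

class Rect : public Shape {
public:
    Rect(double width, double height) : width_(width), height_(height) {}
    double Area() const override { return width_ * height_; }
private:
    double width_;
    double height_;
};

class Square : public Shape {
public:
    explicit Square(double side) : side_(side) {}
    double Area() const override { return side_ * side_; }
private:
    double side_;
};

// No type checks here: adding a new Shape does not touch this routine.
double TotalArea(const std::vector<std::unique_ptr<Shape>>& shapes) {
    double total = 0.0;
    for (const auto& shape : shapes) {
        total += shape->Area();
    }
    return total;
}
```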

Avoid using a base class’s protected data in a derived class (or make that data private instead of protected in the first place).

Member Functions and Data

Keep the number of routines in a class as small as possible.

Keeping the number of routines small is not the only consideration, however: other competing factors were found to be more significant, including deep inheritance trees, a large number of routines called by a routine, and strong coupling between classes.

Evaluate the tradeoff between minimizing the number of routines and these other factors.

Disallow implicitly generated member functions and operators you don’t want.
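In C++11 and later, one way to do this is to mark the unwanted functions as deleted. The `Connection` class below is a hypothetical example that allows default construction but disallows copying.

```cpp
#include <type_traits>

class Connection {  // hypothetical class that should never be copied
public:
    Connection() = default;

    // Without these two lines the compiler would generate both implicitly.
    Connection(const Connection&) = delete;
    Connection& operator=(const Connection&) = delete;
};

// Compile-time checks that the copy operations are really gone.
static_assert(!std::is_copy_constructible<Connection>::value,
              "Connection must not be copy-constructible");
static_assert(!std::is_copy_assignable<Connection>::value,
              "Connection must not be copy-assignable");
```

Before C++11, a similar effect was usually achieved by declaring the functions private and leaving them undefined.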

Minimize direct routine calls to other classes.

Minimize indirect routine calls to other classes.

Direct connections are hazardous enough. Indirect connections—such as account.ContactPerson().DaytimeContactInfo().PhoneNumber()—tend to be even more hazardous.
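One way to contain a chain like this is to let the owning class expose a single routine and keep the traversal inside it. The sketch below reuses the routine names from the example; `DaytimePhoneNumber()` and the class layouts are assumptions made for illustration.

```cpp
#include <string>
#include <utility>

class ContactInfo {
public:
    explicit ContactInfo(std::string phone) : phone_(std::move(phone)) {}
    const std::string& PhoneNumber() const { return phone_; }
private:
    std::string phone_;
};

class Person {
public:
    explicit Person(ContactInfo daytime) : daytime_(std::move(daytime)) {}
    const ContactInfo& DaytimeContactInfo() const { return daytime_; }
private:
    ContactInfo daytime_;
};

class Account {
public:
    explicit Account(Person contact) : contact_(std::move(contact)) {}

    // Clients make one direct call; the chain stays inside Account.
    const std::string& DaytimePhoneNumber() const {
        return contact_.DaytimeContactInfo().PhoneNumber();
    }

private:
    Person contact_;
};
```

Client code then depends only on `Account`, so a change to how `Person` stores contact details does not ripple outward.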

In general, minimize the extent to which a class collaborates with other classes.

Try to minimize all of the following:

- Number of kinds of objects instantiated

- Number of different direct routine calls on instantiated objects

- Number of routine calls on objects returned by other instantiated objects

Constructors

Initialize all member data in all constructors, if possible.

Initialize data members in the order in which they’re declared.

Enforce the singleton property by using a private constructor.

Enforce the singleton property by using all static member data and reference counting.
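A minimal sketch of the private-constructor approach in C++ follows. It uses a function-local static instance, which is one common idiom and not the only option; the `Settings` class and its members are hypothetical. The static-data-and-reference-counting variant is not shown.

```cpp
class Settings {  // hypothetical singleton
public:
    // The only way to reach the single instance.
    static Settings& Instance() {
        static Settings instance;  // constructed on first use
        return instance;
    }

    // Copying would create a second instance, so it is disallowed.
    Settings(const Settings&) = delete;
    Settings& operator=(const Settings&) = delete;

    int Verbosity() const { return verbosity_; }
    void SetVerbosity(int level) { verbosity_ = level; }

private:
    Settings() = default;  // private constructor: clients cannot create one
    int verbosity_ = 0;
};
```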

Prefer deep copies to shallow copies until proven otherwise.

Martin Fowler’s Refactoring (1999) describes the specific steps needed to convert from shallow copies to deep copies and from deep copies to shallow copies.

(Fowler calls them reference objects and value objects.)

Reasons to Create a Class

Model real-world objects.

Model abstract objects.

Reduce complexity.

Isolate complexity.

Hide implementation details.

Limit effects of changes.

Hide global data.

Streamline parameter passing.

Make central points of control.

Facilitate reusable code.

Plan for a family of programs.

If you expect a program to be modified, it’s a good idea to isolate the parts that you expect to change by putting them into their own classes.

You can then modify the classes without affecting the rest of the program, or you can put in completely new classes instead.

Package related operations.

To accomplish a specific refactoring.

Classes to Avoid

Avoid creating god classes.

Eliminate irrelevant classes.

Avoid classes named after verbs.

Language-Specific Issues

Here are some of the class-related areas that vary significantly depending on the language:

Behavior of overridden constructors and destructors in an inheritance tree

Behavior of constructors and destructors under exception-handling conditions

Importance of default constructors (constructors with no arguments)

Time at which a destructor or finalizer is called

Wisdom of overriding the language’s built-in operators, including assignment and equality

How memory is handled as objects are created and destroyed, or as they are declared and go out of scope

CHECKLIST: Class Quality

Abstract Data Types

- Have you thought of the classes in your program as Abstract Data Types and evaluated their interfaces from that point of view?

Abstraction

- Does the class have a central purpose?

- Is the class well named, and does its name describe its central purpose?

- Does the class’s interface present a consistent abstraction?

- Does the class’s interface make obvious how you should use the class?

- Is the class’s interface abstract enough that you don’t have to think about how its services are implemented? Can you treat the class as a black box?

- Are the class’s services complete enough that other classes don’t have to meddle with its internal data?

- Has unrelated information been moved out of the class?

- Have you thought about subdividing the class into component classes, and have you subdivided it as much as you can?

- Are you preserving the integrity of the class’s interface as you modify the class?

Encapsulation

- Does the class minimize accessibility to its members?

- Does the class avoid exposing member data?

- Does the class hide its implementation details from other classes as much as the programming language permits?

- Does the class avoid making assumptions about its users, including its derived classes?

- Is the class independent of other classes? Is it loosely coupled?

Inheritance

- Is inheritance used only to model “is a” relationships?

- Does the class documentation describe the inheritance strategy?

- Do derived classes adhere to the Liskov Substitution Principle?

- Do derived classes avoid “overriding” non-overridable routines?

- Are common interfaces, data, and behavior as high as possible in the inheritance tree?

- Are inheritance trees fairly shallow?

- Are all data members in the base class private rather than protected?

Other Implementation Issues

- Does the class contain about seven data members or fewer?

- Does the class minimize direct and indirect routine calls to other classes?

- Does the class collaborate with other classes only to the extent absolutely necessary?

- Is all member data initialized in the constructor?

- Is the class designed to be used as deep copies rather than shallow copies unless there’s a measured reason to create shallow copies?

Language-Specific Issues

- Have you investigated the language-specific issues for classes in your specific programming language?

High-Quality Routines

A routine is an individual method or procedure invocable for a single purpose.

Low-Quality Routine:

- The routine has a bad name.

- The routine isn’t documented (Self-Documenting Code).

- The routine has a bad layout.

- The routine’s input variable, inputRec, is changed.

- The routine reads and writes global variables.

- The routine doesn’t have a single purpose.

- The routine doesn’t defend itself against bad data.

- The routine uses several magic numbers.

- The routine uses only two fields of the CORP_DATA type of parameter.

- Some of the routine’s parameters are unused.

- One of the routine’s parameters is mislabeled.

- The routine has too many parameters.

- The routine’s parameters are poorly ordered and are not documented.

Valid Reasons to Create a Routine

Reduce complexity.

Make a section of code readable.

Avoid duplicate code.

Hide sequences.

Hide pointer operations.

Improve portability.

Simplify complicated boolean tests.

Improve performance.

Design at the Routine Level

Functional cohesion is the strongest and best kind of cohesion, occurring when a routine performs one and only one operation.

Examples of highly cohesive routines include sin(), GetCustomerName(), EraseFile(), CalculateLoanPayment(), and AgeFromBirthday().

Of course, this evaluation of their cohesion assumes that the routines do what their names say they do—if they do anything else, they are less cohesive and poorly named.

Several other kinds of cohesion are normally considered to be less than ideal:

Sequential cohesion exists when a routine contains operations that must be performed in a specific order, that share data from step to step, and that don’t make up a complete function when done together.

An example of sequential cohesion is a routine that calculates an employee’s age and time to retirement, given a birth date.

If the routine calculates the age and then uses that result to calculate the employee’s time to retirement, it has sequential cohesion.
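One way to move such a routine toward functional cohesion is to split it into two routines, each of which does a single job. The sketch below is deliberately simplified: it works in whole calendar years, and the retirement age of 65 and all names are assumptions made for illustration.

```cpp
// Assumed retirement age, for illustration only.
constexpr int kRetirementAge = 65;

// Does one thing: computes an age.
int AgeFromBirthYear(int birthYear, int currentYear) {
    return currentYear - birthYear;
}

// Does one thing: computes time to retirement. It reuses the age routine
// instead of sharing intermediate data inside one larger routine.
int YearsToRetirementFromBirthYear(int birthYear, int currentYear) {
    return kRetirementAge - AgeFromBirthYear(birthYear, currentYear);
}
```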

Communicational cohesion occurs when operations in a routine make use of the same data and aren’t related in any other way.

Temporal cohesion occurs when operations are combined into a routine because they are all done at the same time.

Some programmers consider temporal cohesion to be unacceptable because it’s sometimes associated with bad programming practices such as having a hodgepodge of code in a Startup() routine.

To avoid this problem, think of temporal routines as organizers of other events.

If a routine has bad cohesion, it’s better to put effort into a rewrite to have better cohesion than investing in a pinpoint diagnosis of the problem.

The unacceptable kinds of cohesion:

Procedural cohesion occurs when operations in a routine are done in a specified order.

An example is a routine that gets an employee name, then an address, and then a phone number. The order of these operations is important only because it matches the order in which the user is asked for the data on the input screen.

Logical cohesion occurs when several operations are stuffed into the same routine and one of the operations is selected by a control flag that’s passed in.

It’s called logical cohesion because the control flow or “logic” of the routine is the only thing that ties the operations together—they’re all in a big if statement or case statement together.

Coincidental cohesion occurs when the operations in a routine have no discernible relationship to each other.

Good Routine Names

Describe everything the routine does.

Avoid meaningless or wishy-washy verbs.

Make names of routines as long as necessary.

To name a function, use a description of the return value.

To name a procedure, use a strong verb followed by an object.

Use opposites precisely.

Establish conventions for common operations.

How to Use Routine Parameters

If several routines use similar parameters, put the similar parameters in a consistent order.

Use all the parameters.

Put status or error variables last.

Don’t use routine parameters as working variables.

Document interface assumptions about parameters.

- Whether parameters are input-only, modified, or output-only

- Units of numeric parameters (inches, feet, meters, and so on)

- Meanings of status codes and error values if enumerated types aren’t used

- Ranges of expected values

- Specific values that should never appear

Limit the number of a routine’s parameters to about seven.

Consider an input, modify, and output naming convention for parameters.

You could prefix them with i_, m_, and o_.

If you’re feeling verbose, you could prefix them with Input_, Modify_, and Output_.

Pass the variables or objects that the routine needs to maintain its interface abstraction.

If you find yourself frequently changing the parameter list to the routine, with the parameters coming from the same object each time, that’s an indication that you should be passing the whole object rather than specific elements.

Use named parameters.

Don’t assume anything about the parameter-passing mechanism.

Make sure actual parameters match formal parameters.

Develop the habit of checking types of arguments in parameter lists and heeding compiler warnings about mismatched parameter types.

When to Use a Function and When to Use a Procedure

Purists argue that a function should return only one value, just as a mathematical function does.

This means that a function would take only input parameters and return its only value through the function itself.

A common programming practice is to have a function that operates as a procedure and returns a status value.

if ( report.FormatOutput( formattedReport ) = Success ) then …

Logically, it works as a procedure, but because it returns a value, it’s officially a function.

The use of the return value to indicate the success or failure of the procedure is not confusing if the technique is used consistently.

The alternative is to create a procedure that has a status variable as an explicit parameter, which promotes code like this fragment:

report.FormatOutput( formattedReport, outputStatus )

I prefer the second style of coding, not because I’m hard-nosed about the difference between functions and procedures but because it makes a clear separation between the routine call and the test of the status value. You can get the same separation with the function style by storing the return value before testing it:

outputStatus = report.FormatOutput( formattedReport )

if ( outputStatus = Success ) then …

In short, use a function if the primary purpose of the routine is to return the value indicated by the function name. Otherwise, use a procedure.
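The fragments above are language-neutral pseudocode. In C++ the two styles might look like the sketch below; the `Status` enumeration and the bracket formatting are hypothetical stand-ins, and only the shape of the two `FormatOutput` overloads matters.

```cpp
#include <string>
#include <utility>

enum class Status { Success, Failure };

class Report {
public:
    explicit Report(std::string text) : text_(std::move(text)) {}

    // Procedure style: status comes back through an explicit output parameter.
    void FormatOutput(std::string& formattedReport, Status& outputStatus) const {
        if (text_.empty()) {
            formattedReport.clear();
            outputStatus = Status::Failure;
            return;
        }
        formattedReport = "[" + text_ + "]";
        outputStatus = Status::Success;
    }

    // Function style: status is the return value.
    Status FormatOutput(std::string& formattedReport) const {
        Status outputStatus = Status::Failure;
        FormatOutput(formattedReport, outputStatus);
        return outputStatus;
    }

private:
    std::string text_;
};
```

With the function style, the call and the test can still be kept apart by assigning the result to a variable such as `outputStatus` and testing that variable on the next line.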

Setting the Function’s Return Value

Check all possible return paths.

It’s good practice to initialize the return value at the beginning of the function to a default value, which provides a safety net in the event that the correct return value is not set.

Don’t return references or pointers to local data.

If an object needs to return information about its internal data, it should save the information as class member data.

It should then provide accessor functions that return the values of the member data items rather than references or pointers to local data.
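A small sketch of that arrangement: the object stores what it computed as member data and hands it out through accessors, so nothing returned refers to a local variable that has already gone out of scope. The `Thermometer` class and its labels are hypothetical.

```cpp
#include <string>

class Thermometer {  // hypothetical class
public:
    void Record(double celsius) {
        // Results are saved as member data, not left in locals.
        lastCelsius_ = celsius;
        lastLabel_ = (celsius < 0.0) ? "freezing" : "above freezing";
    }

    // Accessors return values of member data.
    double LastCelsius() const { return lastCelsius_; }
    std::string LastLabel() const { return lastLabel_; }

private:
    double lastCelsius_ = 0.0;
    std::string lastLabel_ = "above freezing";
};
```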

Macro Routines and Inline Routines

Fully parenthesize macro expressions.

`#define Cube( a ) a*a*a`

This macro has a problem.

If you pass it nonatomic values for a, it won’t do the multiplication properly.

If you use the expression Cube( x+1 ), it expands to x+1 * x + 1 * x + 1, which, because of the precedence of the multiplication and addition operators, is not what you want.

`#define Cube( a ) (a)*(a)*(a)`

This is close, but still no cigar. If you use Cube() in an expression that has operators with higher precedence than multiplication, the (a) * (a) * (a) will be torn apart.

`#define Cube( a ) ((a)*(a)*(a))`
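The three versions can be compared side by side. In the sketch below the macros are renamed (hypothetical names) so that all three can coexist. The second case uses division, which has the same precedence as multiplication and groups left to right, as a simple way to show the expression being torn apart.

```cpp
#define CUBE_UNPARENTHESIZED( a ) a*a*a
#define CUBE_ARGS_ONLY( a ) (a)*(a)*(a)
#define CUBE_FULL( a ) ((a)*(a)*(a))

// Expands to x+1*x+1*x+1, so x == 2 gives 7 instead of 27.
inline int DemoUnparenthesized(int x) { return CUBE_UNPARENTHESIZED( x+1 ); }

// Expands to 54/(x)*(x)*(x), so x == 3 gives 162 instead of 2.
inline int DemoArgsOnly(int x) { return 54 / CUBE_ARGS_ONLY( x ); }

// Expands to 54/((x+1)*(x+1)*(x+1)), so x == 2 gives the intended 2.
inline int DemoFull(int x) { return 54 / CUBE_FULL( x+1 ); }
```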

Name macros that expand to code like routines so that they can be replaced by routines if necessary.

Limitations on the Use of Macro Routines

Modern languages like C++ provide numerous alternatives to the use of macros:

- const for declaring constant values

- inline for defining functions that will be compiled as inline code

- template for defining standard operations like min, max, and so on in a type-safe way

- enum for defining enumerated types

- typedef for defining simple type substitutions
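A short sketch of each alternative in the list above. All identifiers here (MAX_EMPLOYEES, Color, EmployeeId, Max) are illustrative assumptions rather than names from the original:

```cpp
// const replaces a #define'd constant.
const int MAX_EMPLOYEES = 100;

// enum replaces a group of #define'd integer codes.
enum Color { Color_Red, Color_Green, Color_Blue };

// typedef replaces a #define'd type substitution.
typedef unsigned long EmployeeId;

// inline function: the argument is evaluated once, with normal type checking.
inline int Cube(int a) { return a * a * a; }

// template: one type-safe definition for any type that supports operator<.
template <typename T>
inline const T& Max(const T& a, const T& b) { return (a < b) ? b : a; }
```

Unlike the macro, Cube( x+1 ) here needs no defensive parentheses, because the argument is an ordinary expression evaluated before the call.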

CHECKLIST: High-Quality Routines

Big-Picture Issues

- Is the reason for creating the routine sufficient?

- Have all parts of the routine that would benefit from being put into routines of their own been put into routines of their own?

- Is the routine’s name a strong, clear verb-plus-object name for a procedure or a description of the return value for a function?

- Does the routine’s name describe everything the routine does? Have you established naming conventions for common operations?

- Does the routine have strong, functional cohesion—doing one and only one thing and doing it well?

- Does the routine have loose coupling, with connections to other routines that are small, intimate, visible, and flexible?

- Is the length of the routine determined naturally by its function and logic, rather than by an artificial coding standard?

Parameter-Passing Issues

- Does the routine’s parameter list, taken as a whole, present a consistent interface abstraction?

- Are the routine’s parameters in a sensible order, including matching the order of parameters in similar routines?

- Are interface assumptions documented?

- Does the routine have seven or fewer parameters?

- Is each input parameter used?

- Is each output parameter used?

- Does the routine avoid using input parameters as working variables?

- If the routine is a function, does it return a valid value under all possible circumstances?

Defensive Programming

Protecting Your Program From Invalid Inputs

Check the values of all data from external sources.

If you’re working on a secure application, be especially leery of data that might attack your system: attempted buffer overflows, injected SQL commands, injected HTML or XML code, integer overflows, and so on.

Check the values of all routine input parameters.

Decide how to handle bad inputs.
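As a rough illustration of checking external data, the sketch below validates a text field before accepting it. The "age" field, its limits, and the choice to reject bad input by returning false are all assumptions made for this example:

```cpp
#include <cerrno>
#include <cstdlib>
#include <string>

// Hypothetical limits for an "age" field read from an external source.
const long MIN_AGE = 0;
const long MAX_AGE = 150;

// Returns true and stores the value only if the text is a whole number in range.
// On bad input, returns false and leaves age untouched.
bool ParseAge(const std::string& text, long& age) {
    if (text.empty()) return false;
    errno = 0;
    char* end = nullptr;
    const long value = std::strtol(text.c_str(), &end, 10);
    if (errno == ERANGE) return false;                     // integer overflow in the input
    if (*end != '\0') return false;                        // trailing junk after the number
    if (value < MIN_AGE || value > MAX_AGE) return false;  // outside the legal range
    age = value;
    return true;
}
```

Rejecting the value is only one possible policy; the point is that the decision is made in one visible place.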

Assertions

An assertion is code that’s used during development—usually a routine or macro—that allows a program to check itself as it runs.

When an assertion is true, that means everything is operating as expected.

When it’s false, that means it has detected an unexpected error in the code.

An assertion usually takes two arguments: a boolean expression that describes the assumption that’s supposed to be true and a message to display if it isn’t.
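A two-argument assertion could be sketched in C++ roughly as follows. The macro name ASSERT, the PRODUCTION_BUILD switch, and the Ratio routine are assumptions for this example:

```cpp
#include <cstdio>
#include <cstdlib>

// Hypothetical development-only assertion taking a condition and a message.
// PRODUCTION_BUILD is an assumed project-defined switch that compiles it out.
#ifndef PRODUCTION_BUILD
#define ASSERT( condition, message ) \
    do { \
        if ( !(condition) ) { \
            std::fprintf( stderr, "Assertion failed: %s (%s, line %d)\n", \
                          (message), __FILE__, __LINE__ ); \
            std::abort(); \
        } \
    } while ( false )
#else
#define ASSERT( condition, message ) do { } while ( false )
#endif

// Example use: the denominator is assumed never to be zero at this point.
double Ratio( double numerator, double denominator ) {
    ASSERT( denominator != 0.0, "denominator is unexpectedly equal to 0" );
    return numerator / denominator;
}
```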

Guidelines for Using Assertions

Use error handling code for conditions you expect to occur; use assertions for conditions that should never occur.

Error-handling typically checks for bad input data; assertions check for bugs in the code.

A good way to think of assertions is as executable documentation—you can’t rely on them to make the code work, but they can document assumptions more actively than program-language comments can.

Avoid putting executable code in assertions.

Visual Basic Example of a Dangerous Use of an Assertion

Debug.Assert( PerformAction() ) ' Couldn't perform action

Put executable statements on their own lines, assign the results to status variables, and test the status variables instead.

Visual Basic Example of a Safe Use of an Assertion

actionPerformed = PerformAction()

Debug.Assert( actionPerformed ) ' Couldn't perform action
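The same hazard exists in C++, where the standard assert macro expands to nothing when NDEBUG is defined. In this sketch PerformAction is a hypothetical stand-in, and the counter exists only to make the side effect observable:

```cpp
#include <cassert>

static int g_actionCount = 0;  // counts calls so the side effect is visible

// Hypothetical routine with a side effect.
bool PerformAction() {
    ++g_actionCount;
    return true;
}

void DoWork() {
    // Dangerous form: assert( PerformAction() ); would not run at all when NDEBUG is defined.
    const bool actionPerformed = PerformAction();  // always runs
    assert( actionPerformed && "Couldn't perform action" );
    (void)actionPerformed;  // silences unused-variable warnings in NDEBUG builds
}
```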

Use assertions to document preconditions and postconditions.

Preconditions are the properties that the client code of a routine or class promises will be true before it calls the routine or instantiates the object.

Preconditions are the client code’s obligations to the code it calls.

Postconditions are the properties that the routine or class promises will be true when it concludes executing.

Postconditions are the routine or class’s obligations to the code that uses it.

For highly robust code, assert, and then handle the error anyway.
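A small sketch of asserting a precondition and a postcondition, then handling the error anyway. The routine PercentToFraction and its legal range are assumptions chosen for illustration:

```cpp
#include <cassert>

// Hypothetical routine.
// Precondition: 0 <= percent <= 100. Postcondition: 0 <= result <= 1.
double PercentToFraction( double percent ) {
    // Assert the precondition so violations are obvious during development.
    assert( percent >= 0.0 && percent <= 100.0 );

    // Then handle the error anyway, for builds where assertions are compiled out.
    if ( percent < 0.0 ) percent = 0.0;
    if ( percent > 100.0 ) percent = 100.0;

    const double fraction = percent / 100.0;

    // Assert the postcondition before returning.
    assert( fraction >= 0.0 && fraction <= 1.0 );
    return fraction;
}
```

In a development build a bad argument stops at the first assertion; in a build with NDEBUG defined the clamping code still keeps the result in range.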

Error Handling Techniques

Return a neutral value.

Substitute the next piece of valid data.

Return the same answer as the previous time.

Substitute the closest legal value.

Log a warning message to a file.

Return an error code.

Call an error processing routine/object.

Display an error message wherever the error is encountered.

Handle the error in whatever way works best locally.

Shutdown.

Some systems shut down whenever they detect an error. This approach is useful in safety-critical applications.
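Two of the techniques listed above, substituting the closest legal value and returning an error code, might be sketched like this. The thermometer range and all names are assumptions for the example:

```cpp
// Hypothetical thermometer calibrated for readings from 0 to 100 degrees.
const int MIN_READING = 0;
const int MAX_READING = 100;

// Substitute the closest legal value.
int ClampReading( int rawReading ) {
    if ( rawReading < MIN_READING ) return MIN_READING;
    if ( rawReading > MAX_READING ) return MAX_READING;
    return rawReading;
}

// Return an error code and leave the decision to the caller.
enum Status { Status_Success, Status_OutOfRange };

Status CheckReading( int rawReading ) {
    return ( rawReading < MIN_READING || rawReading > MAX_READING )
        ? Status_OutOfRange
        : Status_Success;
}
```

The first routine keeps the program running with a plausible value; the second refuses to guess and reports the problem instead.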

Robustness vs. Correctness

Correctness means never returning an inaccurate result; no result is better than an inaccurate result. Robustness means always trying to do something that will allow the software to keep operating, even if that leads to results that are inaccurate sometimes.

Safety-critical applications tend to favor correctness over robustness.

Consumer applications tend to favor robustness over correctness.

Exceptions

Use exceptions to notify other parts of the program about errors that should not be ignored.

Throw an exception only for conditions that are truly exceptional, in other words, conditions that cannot be addressed by other coding practices.

Don’t use an exception to pass the buck.

Avoid throwing exceptions in constructors and destructors unless you catch them in the same place.

Throw exceptions at the right level of abstraction.

Include all information that led to the exception in the exception message.

Avoid empty catch blocks.

Know the exceptions your library code throws.

Consider building a centralized exception reporter.

Sub ReportException( _

Try ...

Standardize your project’s use of exceptions.

- If you’re working in a language like C++ that allows you to throw a variety of kinds of objects, data, and pointers, standardize on what specifically you will throw.

For compatibility with other languages, consider throwing only objects derived from the Exception base class.

- Define the specific circumstances under which code is allowed to use throw-catch syntax to perform error processing locally.

- Define the specific circumstances under which code is allowed to throw an exception that won’t be handled locally.

- Determine whether a centralized exception reporter will be used.

- Define whether exceptions are allowed in constructors and destructors.
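In C++, one possible project standard is to throw only types derived from std::exception and to translate low-level exceptions at the interface boundary. The class and routine names below are hypothetical:

```cpp
#include <stdexcept>
#include <string>

// Convention assumed in this sketch: throw only types derived from std::exception.
class EmployeeDataNotAvailable : public std::runtime_error {
public:
    explicit EmployeeDataNotAvailable( const std::string& detail )
        : std::runtime_error( "Employee data not available: " + detail ) {}
};

// Low-level helper; a hypothetical stand-in for file or database access.
int ReadTaxIdFromStorage( int employeeId ) {
    if ( employeeId < 0 ) throw std::out_of_range( "record index below zero" );
    return 1000 + employeeId;
}

// The public routine translates the low-level exception into one
// that matches the abstraction of its own interface.
int GetTaxId( int employeeId ) {
    try {
        return ReadTaxIdFromStorage( employeeId );
    } catch ( const std::out_of_range& e ) {
        throw EmployeeDataNotAvailable( e.what() );
    }
}
```

Callers of GetTaxId see an exception expressed in terms of employees, while the original message is preserved as detail.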

Consider alternatives to exceptions.

You should always consider the full set of error-handling alternatives: handling the error locally, propagating the error using an error code, logging debug information to a file, shutting down the system, or using some other approach.

Barricade Your Program to Contain the Damage Caused by Errors

One way to barricade for defensive programming purposes is to designate certain interfaces as boundaries to “safe” areas.

This same approach can be used at the class level. The class’s public methods assume the data is unsafe, and they are responsible for checking the data and sanitizing it.

Once the data has been accepted by the class’s public methods, the class’s private methods can assume the data is safe.

Another way of thinking about this approach is as an operating-room technique.

Data is sterilized before it’s allowed to enter the operating room. Anything that’s in the operating room is assumed to be safe.

The key design decision is deciding what to put in the operating room, what to keep out, and where to put the doors—which routines are considered to be inside the safety zone, which are outside, and which sanitize the data.

The easiest way to do this is usually by sanitizing external data as it arrives, but data often needs to be sanitized at more than one level, so multiple levels of sterilization are sometimes required.

Convert input data to the proper type at input time.
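A class-level barricade might look something like the sketch below. The Thermostat class, its limits, and the choice to throw on bad input are assumptions. The private method uses an assertion rather than error handling because, inside the barricade, bad data would indicate a bug rather than bad input.

```cpp
#include <cassert>
#include <cstdlib>
#include <stdexcept>
#include <string>

class Thermostat {
public:
    // Public method: treats its input as unsafe, checks it, and
    // converts it to the proper type at input time.
    void SetTargetFromText( const std::string& text ) {
        char* end = nullptr;
        const long value = std::strtol( text.c_str(), &end, 10 );
        if ( text.empty() || *end != '\0' || value < MIN_TARGET || value > MAX_TARGET ) {
            throw std::invalid_argument( "target temperature rejected: " + text );
        }
        StoreTarget( static_cast<int>( value ) );
    }

    int Target() const { return m_target; }

private:
    // Private method: inside the barricade, so it assumes the value is already valid.
    void StoreTarget( int celsius ) {
        assert( celsius >= MIN_TARGET && celsius <= MAX_TARGET );
        m_target = celsius;
    }

    static const long MIN_TARGET = 5;   // hypothetical legal range, in degrees Celsius
    static const long MAX_TARGET = 35;
    int m_target = 20;
};
```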

Debugging Aids

Don’t Automatically Apply Production Constraints to the Development Version.

A common programmer blind spot is the assumption that limitations of the production software apply to the development version.

The production version has to run fast. The development version might be able to run slow.

The production version has to be stingy with resources. The development version might be allowed to use resources extravagantly.

The production version shouldn’t expose dangerous operations to the user. The development version can have extra operations that you can use without a safety net.

Use Offensive Programming

Exceptional cases should be handled in a way that makes them obvious during development and recoverable when production code is running.

Here are some ways you can program offensively:

- Make sure asserts abort the program. Don’t allow programmers to get into the habit of just hitting the ENTER key to bypass a known problem. Make the problem painful enough that it will be fixed.

- Completely fill any memory allocated so that you can detect memory allocation errors.

- Completely fill any files or streams allocated to flush out any file-format errors.

- Be sure the code in each case statement’s default or else clause fails hard (aborts the program) or is otherwise impossible to overlook.

- Fill an object with junk data just before it’s deleted.
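Two of these techniques can be sketched briefly in C++: a default clause that fails hard, and a routine that overwrites a buffer with junk before it is released. The Shape enum, the routine names, and the 0xCC fill pattern are assumptions for illustration.

```cpp
#include <cstddef>
#include <cstdio>
#include <cstdlib>
#include <cstring>

enum Shape { Shape_Circle, Shape_Square };

// The default clause fails hard, so an unhandled case cannot be overlooked.
int SideCount( Shape shape ) {
    switch ( shape ) {
        case Shape_Circle: return 0;
        case Shape_Square: return 4;
        default:
            std::fprintf( stderr, "SideCount: unhandled shape %d\n", static_cast<int>( shape ) );
            std::abort();
    }
}

// Arbitrary, recognizable fill pattern chosen for this sketch.
const unsigned char JUNK_BYTE = 0xCC;

// Overwrite a buffer with junk just before it is released,
// so that any later read of the stale data stands out.
void ScrubBuffer( unsigned char* buffer, std::size_t size ) {
    std::memset( buffer, JUNK_BYTE, size );
}
```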

Plan to Remove Debugging Aids

If you’re writing code for commercial use, the performance penalty in size and speed can be prohibitive.

Use version control and build tools like make.

Use a built-in preprocessor.

#define DEBUG

Other debug code might be for specific purposes only, so you can surround it by a statement like #if DEBUG == POINTER_ERROR.

In other places, you might want to set debug levels, so you could have statements like #if DEBUG > LEVEL_A.
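A minimal sketch of preprocessor-controlled debug code. The in-memory log and the AddItems routine are assumptions, used only so the effect of the switch can be observed:

```cpp
#include <string>
#include <vector>

#define DEBUG  // remove this line, or control it from the build, for production

// Hypothetical in-memory log so the effect of the switch is easy to see.
std::vector<std::string> g_debugLog;

int AddItems( int a, int b ) {
#if defined( DEBUG )
    // Compiled only into the development version.
    g_debugLog.push_back( "AddItems called" );
#endif
    return a + b;
}
```

When DEBUG is not defined, the logging line is never compiled, so it costs nothing in size or speed.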

Write your own preprocessor.

Use debugging stubs.

C++ Example of a Routine for Checking Pointers During Development

void CheckPointer( void *pointer ) {

C++ Example of a Routine for Checking Pointers During Production

void CheckPointer( void *pointer ) {
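The two listings above are truncated, so the following is only a sketch of how a debugging stub could be arranged, not the original code. The DEVELOPMENT_BUILD switch, the null check, and the ReadValue caller are assumptions.

```cpp
#include <cstdio>
#include <cstdlib>

// DEVELOPMENT_BUILD is a hypothetical build switch for this sketch.
#define DEVELOPMENT_BUILD

#if defined( DEVELOPMENT_BUILD )
// Development version: perform real checks and fail loudly.
void CheckPointer( void *pointer ) {
    if ( pointer == nullptr ) {
        std::fprintf( stderr, "CheckPointer: null pointer\n" );
        std::abort();
    }
}
#else
// Production version: a stub that returns control immediately.
void CheckPointer( void * /*pointer*/ ) {
}
#endif

// Callers are identical in both builds.
int ReadValue( int *value ) {
    CheckPointer( value );
    return *value;
}
```

Because only the body of CheckPointer changes, none of the call sites need to be edited when switching between development and production.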

Determining How Much Defensive Programming to Leave in Production Code

Remove code that results in hard crashes.

Leave in code that helps the program crash gracefully.

Log errors for your technical support personnel.

See that the error messages you leave in are friendly.

CHECKLIST: Defensive Programming

General

- Does the routine protect itself from bad input data?

- Have you used assertions to document assumptions, including preconditions and postconditions?

- Have assertions been used only to document conditions that should never occur?

- Does the architecture or high-level design specify a specific set of error-handling techniques?

- Does the architecture or high-level design specify whether error handling should favor robustness or correctness?

- Have barricades been created to contain the damaging effect of errors and reduce the amount of code that has to be concerned about error processing?

- Have debugging aids been used in the code?

- Has information hiding been used to contain the effects of changes so that they won’t affect code outside the routine or class that’s changed?

- Have debugging aids been installed in such a way that they can be activated or deactivated without a great deal of fuss?

- Is the amount of defensive programming code appropriate—neither too much nor too little?

- Have you used offensive programming techniques to make errors difficult to overlook during development?

Exceptions

- Has your project defined a standardized approach to exception handling?

- Have you considered alternatives to using an exception?

- Is the error handled locally rather than throwing a non-local exception if possible?